TrueDepth 3D scanner

3D TrueDepth Camera Scan on the App Store

Description

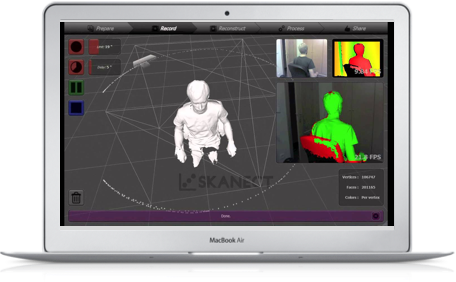

Turn your device into a professional 3D scanner with our 3D TrueDepth Camera Scan app. Easily and quickly transform any real object into a 3D scan. Export 3D scans in OBJ, STL, PLY formats or view in AR! 3D scans have never been so easy! Install our application and enjoy new opportunities!

IMPORTANT NOTICE: The app requires the TrueDepth camera, which is only available on the iPhone X, Xs, Xr, Xs Max, iPhone 11, iPhone 11 Pro, iPhone 11 Pro Max, iPhone 12, iPhone 12 Pro, and iPhone 12 Pro Max.

Subscription Details:

- Payment will be charged to your App Store Account at confirmation of purchase.

- Your subscription will automatically renew unless auto-renew is turned off at least 24 hours before the end of the current subscription period.

- Your account will be charged for renewal within 24 hours prior to the end of the current subscription period. Automatic renewals will cost the same price you were originally charged for the subscription.

- Manage or cancel your subscription from your user settings in the App Store at any time after purchasing.

- Any unused portion of a free trial will be forfeited if you purchase a subscription.

Privacy Policy: https://sites.google.com/view/3dtruedepthcamerascan/home

Terms of Use: https://sites.google.com/view/3d-truedepth-camera-scan-terms/home

Version 1.1

SDK Update

The developer, Tahir Akbar, indicated that the app’s privacy practices may include handling of data as described below. For more information, see the developer’s privacy policy.

Data Not Linked to You

The following data may be collected but it is not linked to your identity:

- Identifiers

- Diagnostics

Privacy practices may vary based on, for example, the features you use or your age.

Information

- Seller: Tahir Akbar

- Size: 40.8 MB

- Category: Utilities

- Age Rating: 4+

- Copyright: © 2021 Tahir Akbar

- Price: Free

Apple’s 3D scanner will change everything | by Alex Barrera

This story was first published in The Aleph Report. If you want to read the latest reports, please subscribe to our newsletter and follow us on Twitter.

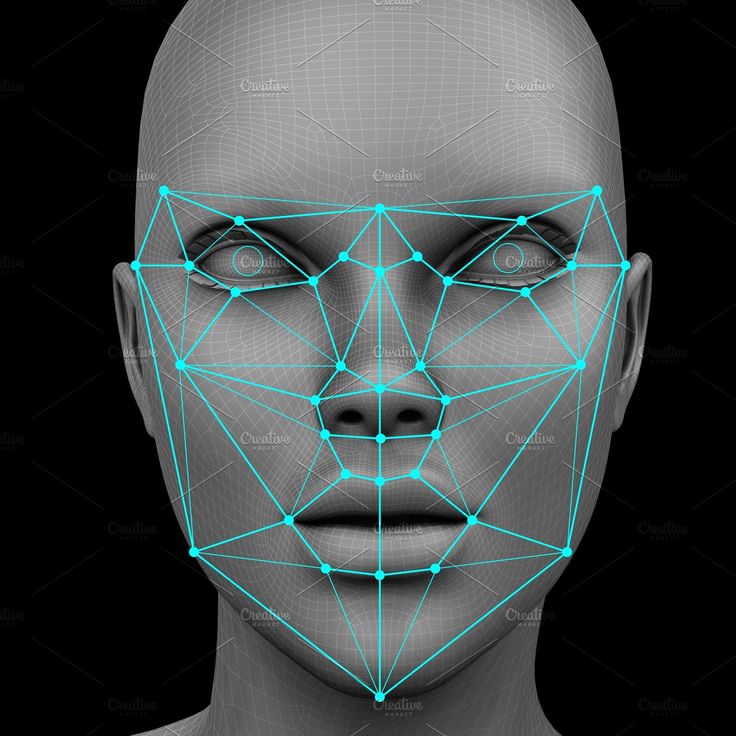

In September 2017, Apple announced the iPhone X. A new feature, called Face ID, lets users unlock the phone through facial recognition. This single feature will have profound implications across other industries.

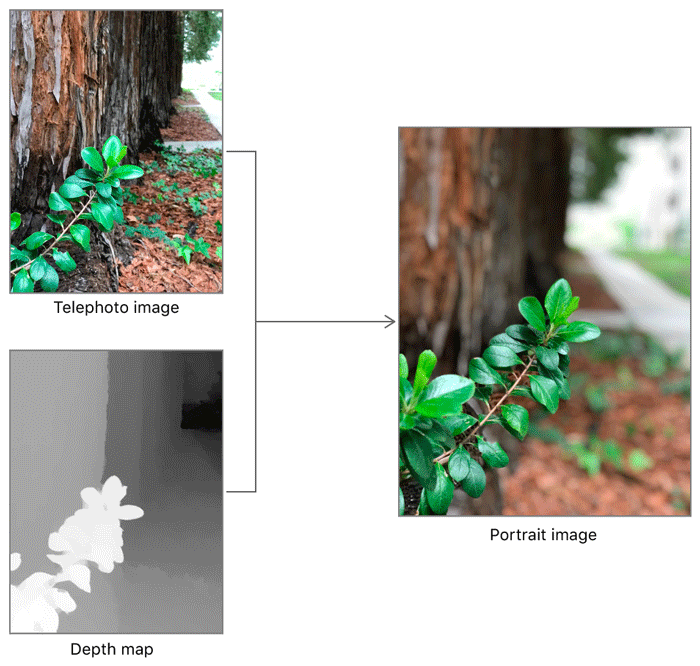

Apple dubbed the camera TrueDepth. This sensor lets the phone not only see in 2D but also gather depth information to form a 3D map.

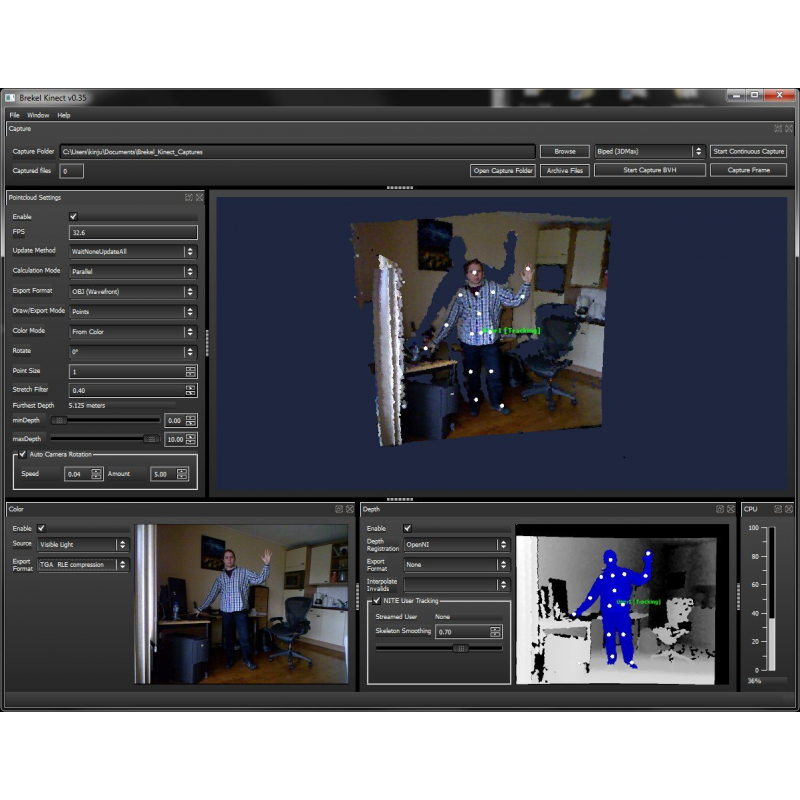

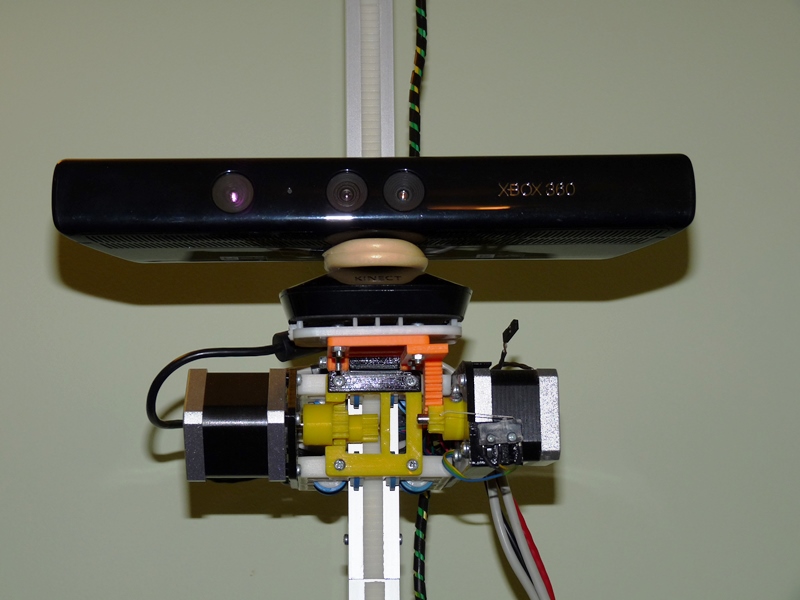

It’s, in a nutshell, a 3D scanner, right in your palm. It’s a Kinect embedded in your phone. And I bring up Kinect because it’s the same technology. In November of 2013, Apple acquired the Israeli 3D sensing company PrimeSense, the technology behind Kinect, for 360 million dollars.

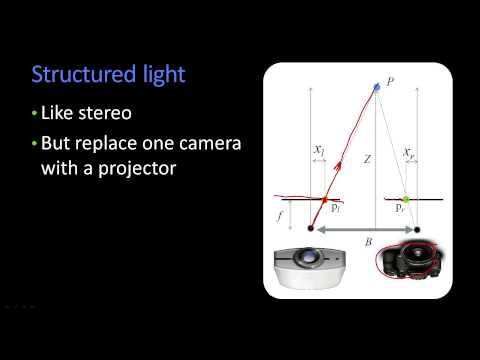

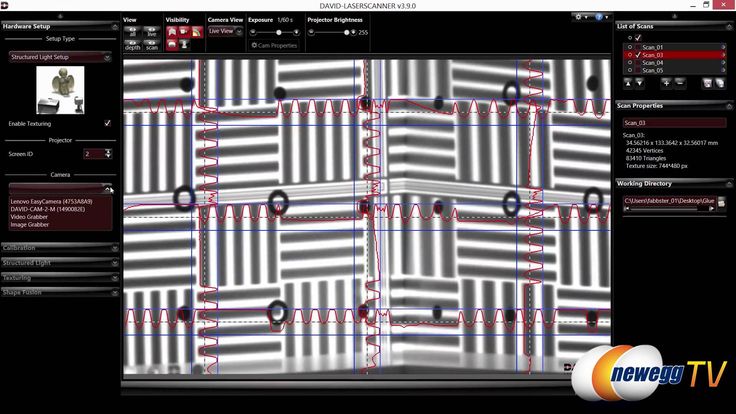

PrimeSense’s technology uses what’s called structured light 3D scanning. It’s one of the three main techniques employed to do 3D scanning and depth sensing.

Structured light 3D scanning is the ideal method to embed in a phone. It doesn’t offer a large sensing range (from 40 centimeters to 3.5 meters), but it provides the highest depth accuracy.

Depth accuracy is critical for Apple. The sensor is the cornerstone of Face ID, Apple’s facial authentication system. If you’re going to use your face to unlock your life, you had better be sure the accuracy is high enough to prevent face fraud.

This technology, though, has been around for a while. PrimeSense pioneered the first commercial depth-sensing camera with Kinect in November of 2010.

Two years later, in early 2012, Intel started developing its own depth-sensing technology. First called Perceptual Computing, it was later renamed Intel RealSense.

In September 2013, Occipital launched its Structure Sensor campaign on Kickstarter. It raised 1.3 million dollars, making it one of the top campaigns of the day.

But despite the field heating up, the uses of the technology remained either desktop-bound or gadget-bound.

In 2010, PrimeSense was already trying to miniaturize their sensor so it could run on a smartphone. It would take them seven years (and Apple’s resources) to finally be able to deliver on that promise in the form of the iPhone X.

The feat is quite spectacular. Apple managed to fit the Kinect on a smartphone, while keeping the energy-hungry sensors in check, beating everyone, including Intel and Qualcomm, to the market.

Several technological and behavioral changes are converging in the field. On one side, we see a massive improvement in computer vision systems. Deep learning algorithms are pushing the performance of such systems to human-expert parity. In turn, such systems are now available as cloud-based commodities.

At the same time, games like Pokémon Go are building core Augmented Reality (AR) behavior in users. Now, more than ever, people are comfortable using their phones to merge reality with AR.

On top of that, the current departure from text-based interfaces is beginning to change the behavior of users. Voice-only is turning into a reality, and it’s a matter of time until video-only becomes the norm too.

Having a depth sensor in a phone changes everything. What before took specialized hardware is now accessible everywhere. What before fixed us to a specific location is now mobile.

The convergence of user behavior, increased reliance on computer vision systems, and mobile 3D sensing technology is a killer.

The exciting thing is that Apple will turn depth-sensing technology into a commodity. Apple’s TrueDepth camera, though, will be too limited for many people’s new developments. These people will, in turn, look at more powerful sensors like lidar or Qualcomm’s new depth sensors, catalyzing the whole ecosystem.

In other words, Apple is putting depth sensors on the table for everyone to admire. They’re doing that, not with technology, but with a killer application of the technology, Face ID. They’re showing the way to more powerful apps.

It’s hard to predict what this combination will be able to produce. Here are some ideas, but I’m sure we’ll see some surprising apps soon enough.

Photo editing and avatar galore

I’ll start with the obvious. Your Instagram feed and stories will become better than ever. Photos taken with the iPhone X will be able to render different depths of focus. TrueDepth will also enable users to create hyper-realistic masks and image gestures.

Gesture-based controls

While these interfaces have been around for a long time, Apple just moved them to the world’s platform: mobile. It’s a matter of time before we see these apps cropping up in our smart TVs or on other surfaces.

Security and biometrics

Apple has already demonstrated face-based authentication. I expect it to be adopted massively everywhere, from banks to airport controls to office or home access systems.

This technology could also aid in KYC systems, fraud prevention or speedier identity checks. An attractive field of application could also be forensics. The last one is something I thought about after the Boston bombings of 2013.

Could you stitch together all the user-generated video of that day? Could you create a reliable 3D space that investigators could use to analyze what happened? Depth-sensing cameras everywhere will make this a reality.

Navigational enhancements

Depth sensing is also critical in several navigational domains, from Augmented Reality (AR) and Virtual Reality (VR) all the way to the mapping needs of autonomous vehicles. A portable device that can enhance 3D maps could be a huge benefit for many self-driving car companies.

On top of that, add the rise of drone-based logistics and its complicated navigational issues. Right now many systems use lidar sensors, but it’s easy to imagine how they could use depth sensors too.

For example, they could be used to identify where the recipient is located and verify their identity.

People tracking for ads

Authorities already use people-tracking technologies for “security” purposes. With a small twist, we could also use depth-sensing technology for customer tracking and efficient ad delivery in the physical world. Adtech is going to have a field day with this tech.

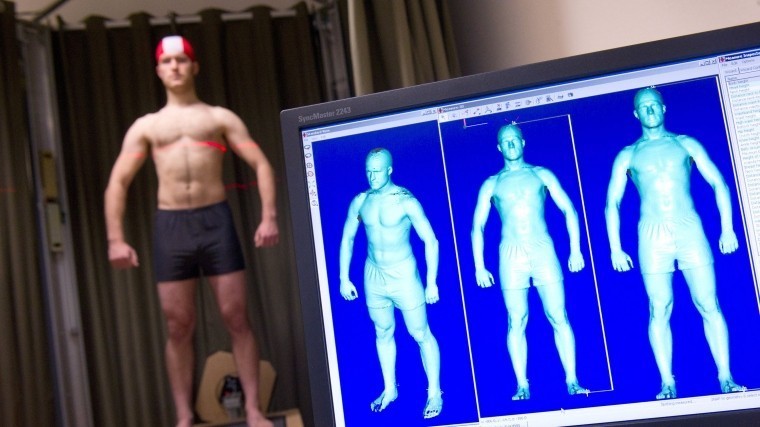

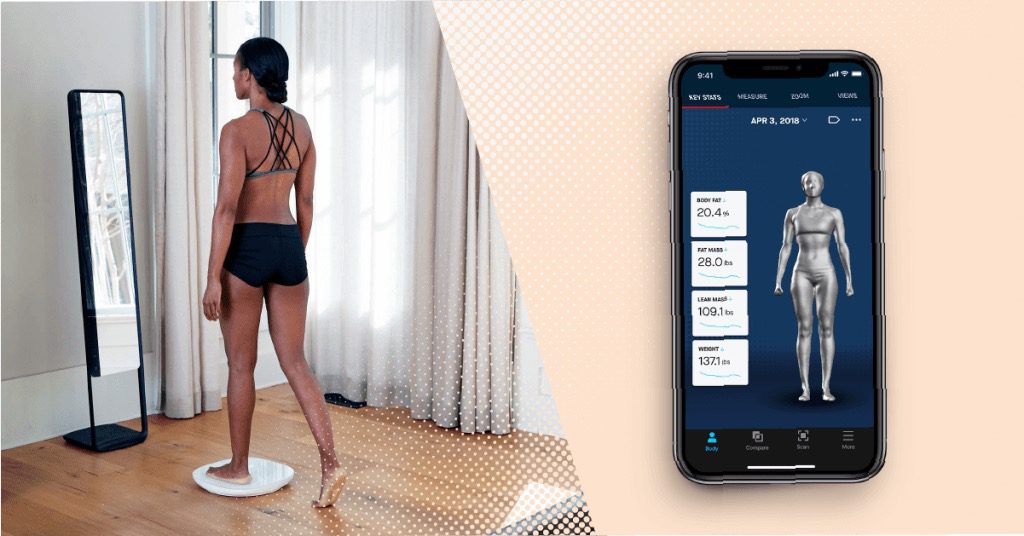

3D scanning

This is an obvious one. I would only add that Apple made 3D scanning not only portable but ubiquitous. It’s a matter of time before we see it used in many different environments, such as active maintenance, Industrial IoT, or the real estate industry.

Predictive health

One of the spaces this technology will revolutionize is eHealth and predictive medicine. Users will now be able to scan physical ailments and send the scans to their doctors.

In the same vein, it will also affect the fitness space, allowing for weight and muscle mass tracking.

Depth-sensing will have immense repercussions for computer vision systems. It allows them to add a third dimension (depth) and speed up object recognition.

This will bring real image tracking and detection to our phones. We should expect better real-time product detection (and buying), or improvements in fashion-related products.

Mobile journalism

Last but not least, I’m intrigued by how journalists will use such technology. In the same way forensic teams might employ these systems, journalism can also benefit from them.

Mobile journalism (MoJo) is already an emerging trend but could be significantly enhanced by the use of 3D videos and depth recreations.

We are on the verge of seeing an explosion of apps using this technology. Apple already has ARKit on the market, and the Android ecosystem is moving to adopt ARCore, finally delivering on the promise of Project Tango.

Any organization out there should devote some time to thinking about how this technology can bring a new product to life. From Real Estate to Construction to Media, depth sensors are going to change how we interface with physical information.

Even if you don’t work with physical information, it’s worth thinking about how this technology enables us to bridge both realities.

This space is going to move fast. Now that Apple has opened the floodgates, the whole Android ecosystem will follow. I suspect that in less than two to three years we’ll see a robust set of apps in this space.

“According to the new note seen by MacRumors, inquiries by Android vendors into 3D-sensing tech have at least tripled since Apple unveiled its TrueDepth.”

Following the app ecosystem, we’ll see a crop of devices embedding depth sensor technology beyond the phone, and it will eventually be all around us.

All about 3D scanners: from varieties to applications

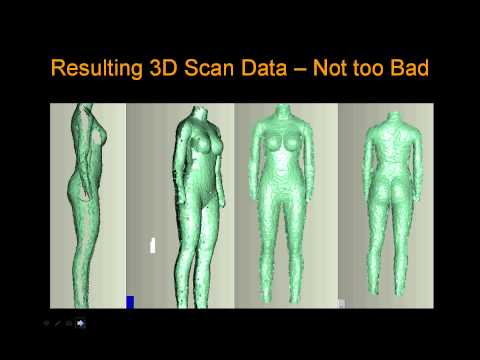

A 3D scanner is a device that analyzes a physical object or environment to collect data on its shape and, where possible, its appearance (for example, its color). The collected data is then used to create a digital three-dimensional model of the object.

Several technologies can be used to build a 3D scanner, each with its own advantages, disadvantages, and cost. There are also limits on the kinds of objects that can be digitized: objects that are shiny, transparent, or mirror-like are particularly difficult.

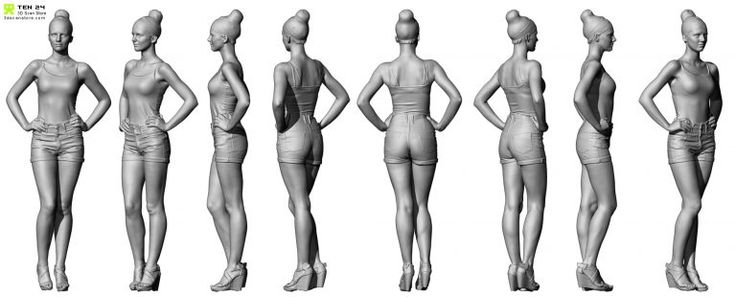

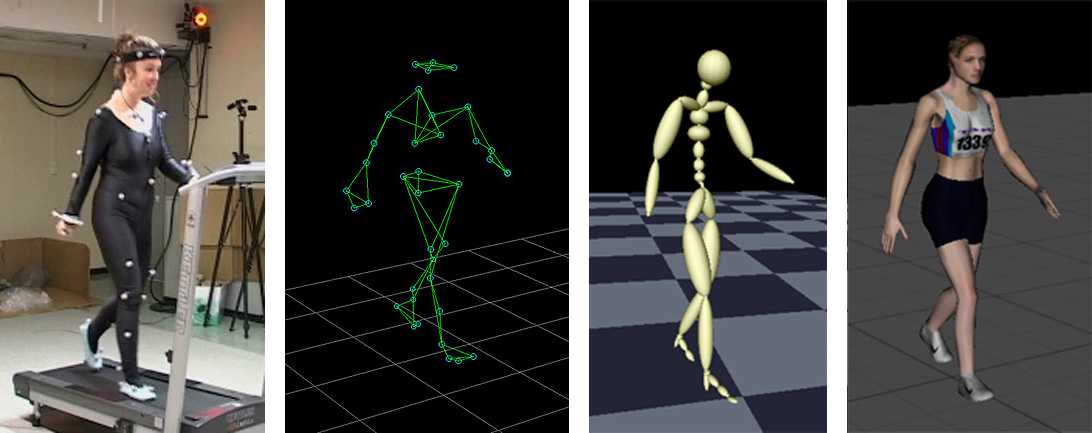

3D data collection also matters for many other applications. It is used in the entertainment industry to create films and video games, and it is in demand in industrial design, orthopedics and prosthetics, reverse engineering, prototyping, quality control and inspection, and the documentation of cultural artifacts.

Functionality

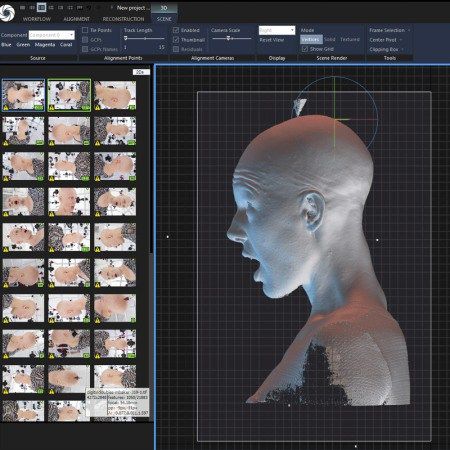

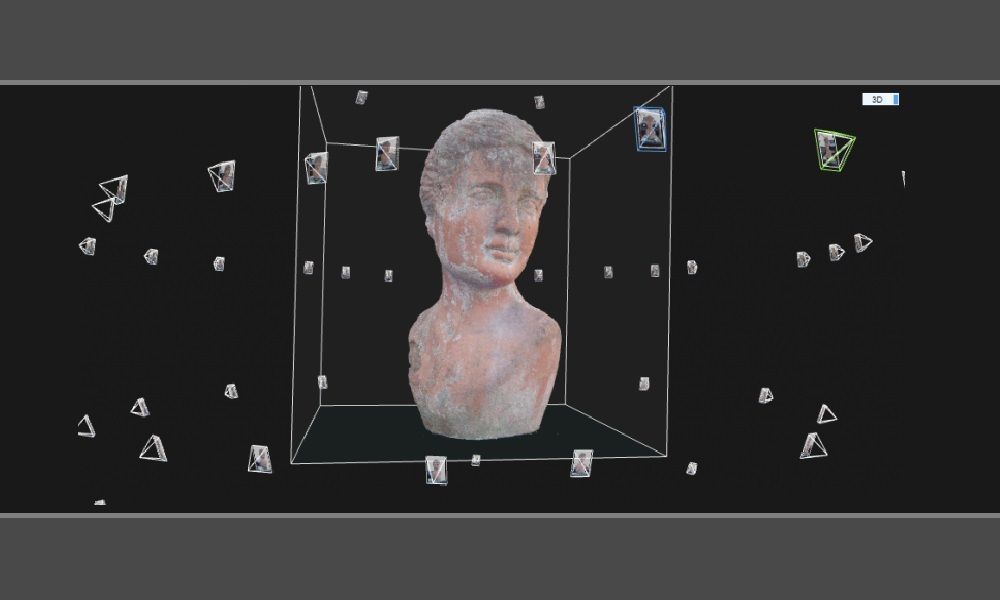

The purpose of a 3D scanner is to create a point cloud of geometric samples on the surface of an object. These points can then be used to extrapolate the shape of the object (a process called reconstruction). If color information is collected at each point, the colors of the reconstructed surface can also be determined.

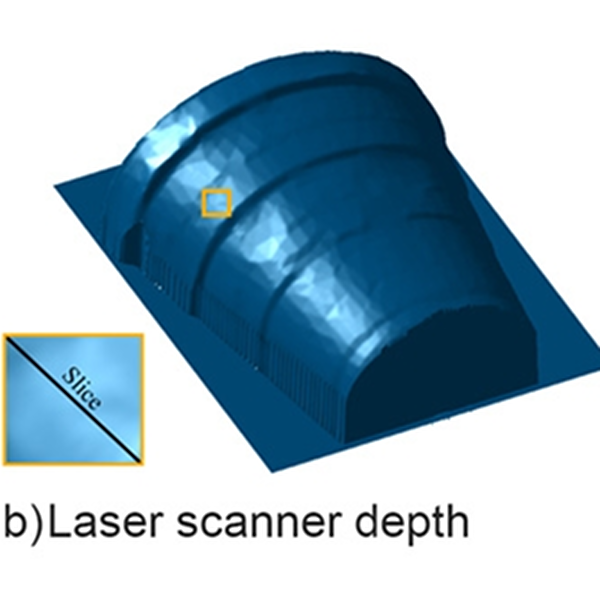

3D scanners share several traits with ordinary cameras. Like cameras, they have a cone-shaped field of view, and they can only collect information about surfaces that are not obscured. The difference is that a camera records only the color of the surfaces within its field of view, while a 3D scanner records the distance to those surfaces. The "picture" produced by a 3D scanner therefore describes the distance to the surface at each point in the image, which makes it possible to determine the three-dimensional position of every point in the picture.
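As a rough illustration of how such a distance "picture" becomes 3D points, the sketch below back-projects a depth map through an idealized pinhole camera. It assumes each value is depth along the camera's optical axis and that the intrinsics `fx`, `fy`, `cx`, `cy` are known from calibration; these names and the tiny input are hypothetical and not taken from any particular scanner.

```python
from typing import List, Tuple

Point3 = Tuple[float, float, float]

def depth_map_to_points(depth: List[List[float]], fx: float, fy: float,
                        cx: float, cy: float) -> List[Point3]:
    """Back-project a depth map into 3D points with a pinhole camera model.

    depth[v][u] is the depth (Z) seen at pixel column u, row v; values <= 0
    mark pixels with no valid measurement and are skipped.
    """
    points: List[Point3] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # no return for this pixel
            x = (u - cx) * z / fx  # horizontal offset grows with distance
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points

# A 2x2 depth map with one missing pixel (hypothetical values).
cloud = depth_map_to_points([[1.0, 1.0], [2.0, 0.0]], fx=1.0, fy=1.0, cx=0.5, cy=0.5)
print(cloud)
```

Each valid pixel becomes one point of the point cloud described above; pixels without a return simply leave holes.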

In most cases, a single scan cannot produce a complete model of the object. Several scans, usually from many different directions, are needed to obtain information about all sides of the object. These scans must be brought into a common coordinate system, a process called registration or alignment, and then merged into a complete model. This whole process, from individual distance maps to a full model, is called the 3D scanning pipeline.
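The alignment step can be sketched in its simplest form: given points already known to correspond in two scans, find the rotation and translation that best map one set onto the other in the least-squares sense. This is a 2D simplification written for illustration, and the function names are hypothetical; real pipelines work in 3D and usually have to discover the correspondences as well, for example with iterative closest point (ICP).

```python
import math
from typing import List, Tuple

Point2 = Tuple[float, float]

def rigid_align_2d(src: List[Point2], dst: List[Point2]) -> Tuple[float, Point2]:
    """Least-squares rotation angle and translation mapping src onto dst.

    Assumes src[i] and dst[i] are measurements of the same physical point.
    """
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    # Accumulate cross and dot products of the centred point pairs.
    cross = dot = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy
        cross += px * qy - py * qx
        dot += px * qx + py * qy
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # The translation moves the rotated source centroid onto the target centroid.
    return theta, (dx - (c * sx - s * sy), dy - (s * sx + c * sy))

def apply_rigid_2d(theta: float, t: Point2, pts: List[Point2]) -> List[Point2]:
    """Rotate each point by theta about the origin, then translate by t."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + t[0], s * x + c * y + t[1]) for x, y in pts]

# Second "scan" is the first one rotated by 90 degrees and shifted by (2, 3).
scan_a = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
scan_b = [(2.0, 3.0), (2.0, 4.0), (1.0, 3.0)]
theta, t = rigid_align_2d(scan_a, scan_b)
print(math.degrees(theta), t)
```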

Technology

There are several technologies for digitally capturing the shape of an object and creating a 3D model of it. A widely used classification divides 3D scanners into two types: contact and non-contact. Non-contact 3D scanners can in turn be divided into two groups, active and passive. A variety of technologies fall under each of these categories.

Coordinate measuring machine with two fixed, mutually perpendicular measuring arms

Contact 3D scanners

Contact 3D scanners probe the object directly through physical contact, while the object itself rests on a precision surface plate that is ground and polished to a specified maximum surface roughness. If the object is uneven or cannot rest stably on a flat surface, it is held in place by a fixture such as a vise.

The scanner mechanism comes in three different forms:

- A carriage system, in which the measuring arms are held rigidly perpendicular to each other and measurement along each axis occurs as an arm slides along the carriage. This system works best for flat profiles or simple convex curved surfaces.

- An articulated arm with rigid segments and high-precision angle sensors. Locating the end of the measuring arm requires complex calculations based on the rotation angle of the wrist joint and of each joint of the arm. This mechanism is ideal for probing recesses or interior spaces with a small opening.

- A combination of the two methods. For example, an articulated arm can be mounted on a carriage, which makes it possible to capture 3D data from large objects with internal cavities or overlapping surfaces.
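For the articulated-arm design, locating the probe tip from the measured joint angles is a forward-kinematics calculation. The sketch below is a planar simplification with hypothetical link lengths; a real arm also measures wrist rotation and works in 3D, but the principle of chaining each joint's rotation along the arm is the same.

```python
import math
from typing import List, Tuple

def probe_tip(link_lengths: List[float], joint_angles: List[float]) -> Tuple[float, float]:
    """Planar forward kinematics: arm-tip position from its joint angles.

    Each angle (radians) is measured relative to the previous link, so the
    absolute heading of a link is the sum of all joint angles before it.
    """
    x = y = 0.0
    heading = 0.0
    for length, angle in zip(link_lengths, joint_angles):
        heading += angle  # rotations accumulate along the chain
        x += length * math.cos(heading)
        y += length * math.sin(heading)
    return x, y

# Two 1-unit links, the second bent 90 degrees: tip at roughly (1, 1).
print(probe_tip([1.0, 1.0], [0.0, math.pi / 2]))
```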

The CMM (coordinate measuring machine) is a prime example of a contact 3D scanner. CMMs are used mainly in manufacturing and can be extremely precise. Their main disadvantage is that they require direct contact with the surface of the object, which may alter or even damage it. This is a serious concern when scanning delicate or valuable items such as historical artifacts. Another disadvantage of CMMs compared with other scanning methods is speed: moving the arm with the probe into position can be very slow, and even the fastest CMMs operate at no more than a few hundred hertz. Optical systems such as laser scanners, by contrast, can operate at 10 to 500 kHz.

Another example is hand-held measuring probes used to digitize clay models for computer animation.

A lidar device can be used to scan buildings, rock formations, and similar objects to create 3D models of them. The lidar can aim its laser beam over a wide range: its head rotates horizontally while a mirror tilts vertically. The laser beam measures the distance to the first object in its path.

Non-contact active scanners

Active scanners emit some kind of radiation or light and probe an object by detecting its reflection, or its passage through the object or medium. The emission may be light, ultrasound, or X-rays.

Time-of-Flight Scanners

A time-of-flight 3D laser scanner is an active scanner that uses a laser beam to probe the object. It is built around a time-of-flight laser rangefinder, which determines the distance to a surface by timing the round trip of a pulse of light: the laser emits a pulse, and a detector measures the time until the reflected light returns. Because the speed of light $c$ is known, the round-trip time gives the distance the light has traveled, which is twice the distance between the scanner and the surface. If $t$ is the round-trip time, the distance is $d = c t / 2$. The accuracy of a time-of-flight scanner therefore depends on how precisely $t$ can be measured: light needs approximately 3.3 picoseconds to travel 1 millimeter.
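The round-trip relation and the 3.3 ps figure can be checked with a few lines of Python. The constant is the defined speed of light in vacuum; the function names are illustrative only, and air slightly slows light in practice, which this sketch ignores.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second, exact by definition (vacuum)

def tof_distance(round_trip_s: float) -> float:
    """Scanner-to-surface distance from the round-trip time of a pulse: c * t / 2."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0

def travel_time(distance_m: float) -> float:
    """Time for light to cover distance_m once (one way)."""
    return distance_m / SPEED_OF_LIGHT

print(tof_distance(6.671e-9))  # a pulse returning after ~6.67 ns: surface about 1 m away
print(travel_time(0.001))      # about 3.34e-12 s, the ~3.3 ps per millimetre in the text
```

Because the pulse travels there and back, moving the surface 1 mm changes the measured round-trip time by about twice that figure, roughly 6.7 ps.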

The laser rangefinder measures the distance to only one point in the direction it is pointing. The scanner therefore covers its entire field of view one point at a time by changing the direction of the rangefinder, either by rotating the rangefinder itself or by using a system of rotating mirrors. Mirrors are used more often because they are much faster, more accurate, and easier to handle. Typical time-of-flight 3D scanners can measure the distance to 10,000 to 100,000 points per second.
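Each sample from a rotating-head, rotating-mirror scanner is naturally a range plus two angles, so building the point cloud means converting those into Cartesian coordinates. The sketch assumes azimuth is the head's rotation about the vertical axis and elevation is the beam angle above the horizontal; `measure` is a hypothetical stand-in for the rangefinder, not a real device API.

```python
import math
from typing import Callable, List, Sequence, Tuple

Point3 = Tuple[float, float, float]

def polar_to_cartesian(range_m: float, azimuth_rad: float, elevation_rad: float) -> Point3:
    """Convert one (range, azimuth, elevation) sample to x, y, z in the scanner frame."""
    horizontal = range_m * math.cos(elevation_rad)  # projection onto the horizontal plane
    return (horizontal * math.cos(azimuth_rad),
            horizontal * math.sin(azimuth_rad),
            range_m * math.sin(elevation_rad))

def sweep(measure: Callable[[float, float], float],
          azimuths: Sequence[float], elevations: Sequence[float]) -> List[Point3]:
    """Cover the field of view one point at a time, changing direction between samples."""
    return [polar_to_cartesian(measure(az, el), az, el)
            for el in elevations for az in azimuths]

# A fake rangefinder that always reports 5 m, i.e. a sphere around the scanner.
points = sweep(lambda az, el: 5.0, [0.0, 0.1, 0.2], [0.0, 0.1])
print(len(points))  # 6
```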

Time-of-flight devices are also available in a 2D configuration, known as time-of-flight cameras.

Triangulation scanners

Two positions of the object are shown.

A point cloud is generated by triangulation and a laser stripe.

Triangulation-based 3D laser scanners are also active scanners that use a laser to probe the object. Like time-of-flight scanners, they shine a laser on the object, but a separate camera records where the laser dot lands. Depending on how far away the laser strikes the surface, the dot appears at a different place in the camera's field of view. The technique is called triangulation because the laser dot, the camera, and the laser emitter form a triangle. The length of one side of this triangle, the distance between the camera and the laser emitter, is known. The angle at the laser emitter is also known, and the angle at the camera can be determined from the location of the laser dot in the camera's field of view. These three values fully determine the shape and size of the triangle and give the location of its laser-dot corner. In most cases, a laser stripe is used instead of a single dot to speed up data acquisition. The National Research Council of Canada was among the first institutions to develop the basics of triangulation-based laser scanning, in 1978.
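The "one side and two angles" argument is the law of sines. A minimal sketch, assuming the emitter sits at the origin, the camera sits at `(baseline, 0)`, and both angles are interior angles of the triangle measured from the baseline; in a real scanner the camera angle would come from the dot's pixel position via a calibration step that is not shown.

```python
import math
from typing import Tuple

def triangulate(baseline: float, emitter_angle: float, camera_angle: float) -> Tuple[float, float]:
    """Locate the laser dot from the emitter-camera triangle.

    Returns (x, z): position along the baseline and perpendicular distance from it.
    Angles are in radians.
    """
    # Law of sines: the emitter-to-dot side is opposite the camera angle, and the
    # angle at the dot is pi - (emitter_angle + camera_angle).
    emitter_to_dot = baseline * math.sin(camera_angle) / math.sin(emitter_angle + camera_angle)
    return (emitter_to_dot * math.cos(emitter_angle),
            emitter_to_dot * math.sin(emitter_angle))

# Symmetric case: 45 degrees at both ends of a 1 m baseline puts the dot at (0.5, 0.5).
print(triangulate(1.0, math.pi / 4, math.pi / 4))
```

Because the dot's depth comes from small changes in the camera angle, a longer baseline spreads those changes over more pixels, which is one reason triangulation is precise at short range.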

Advantages and disadvantages of

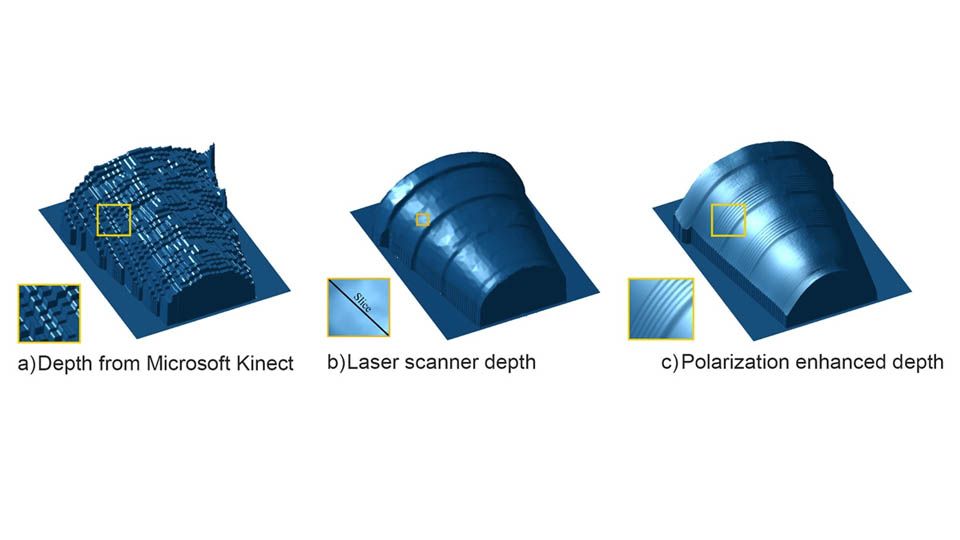

scanners Both time-of-flight and triangulation scanners have their own strengths and weaknesses, which determines their choice for each specific situation. The advantage of time-of-flight devices is that they are optimally suited for operation over very long distances up to several kilometers. They are ideal for scanning buildings or geographic features. At the same time, their disadvantages include measurement accuracy. After all, the speed of light is quite high, so when calculating the time it takes for the beam to overcome the distance to and from the object, some flaws (up to 1 mm) are possible. And this makes the scan results approximate.

As for triangulation rangefinders, the situation is exactly the opposite. Their range is only a few meters, but the accuracy is relatively high. Such devices can measure distance with an accuracy of tens of micrometers.

Edges reduce the accuracy of time-of-flight scanners. When a single laser pulse strikes the edge of an object, part of it is reflected from the edge and part from whatever lies behind it, so the scanner receives information from two places at once. The point's coordinates, calculated relative to the scanner's position, are then based on an average of the two reflections, which places the point in the wrong location. In high-resolution scans the beam is more likely to hit an edge, and the resulting data shows noise just behind the edges of the object. Scanners with a narrower beam reduce the problem, but their range is limited because the beam widens with distance. Software can also help by keeping only the first reflection of the beam and ignoring the second.
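A toy model makes the edge problem concrete: if one pulse produces two echoes and the scanner averages them, the reported range lies on neither surface. The ranges below are invented, and real scanners analyze echo waveforms rather than two clean numbers; keeping only the earliest echo corresponds to the first-reflection software approach mentioned above.

```python
from typing import Sequence

def averaged_range(echo_ranges: Sequence[float]) -> float:
    """Range reported if all echoes of one pulse are averaged together."""
    return sum(echo_ranges) / len(echo_ranges)

def first_return(echo_ranges: Sequence[float]) -> float:
    """Keep only the earliest (nearest) echo and ignore later ones."""
    return min(echo_ranges)

# A pulse straddling a box edge 4.0 m away, with a wall 6.0 m away behind it.
echoes = [4.0, 6.0]
print(averaged_range(echoes))  # 5.0: a phantom point floating between the surfaces
print(first_return(echoes))    # 4.0: the point lands on the edge itself
```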

At 10,000 points per second, a low-resolution scan can be completed within seconds, but a high-resolution scan requires millions of measurements and can take minutes. Keep in mind that the data may be distorted if the object or the scanner moves: each point is sampled at a different moment and from a particular position, so any motion during the scan corrupts the results. That is why it is important to mount both the object and the scanner on stable platforms and to keep vibration to a minimum. For the same reason, scanning moving objects is practically impossible. Recently, however, there has been active research into compensating for the effect of vibration on the data.
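The timing claim is simple arithmetic: a point-by-point scanner needs the number of points divided by its sampling rate. The point counts below are hypothetical examples, not specifications of any particular scanner.

```python
def scan_time_s(num_points: int, points_per_second: float) -> float:
    """Seconds needed to sample num_points one at a time at a fixed rate."""
    return num_points / points_per_second

rate = 10_000  # points per second, the figure used in the text
print(scan_time_s(20_000, rate))          # 2.0 s for a coarse scan
print(scan_time_s(5_000_000, rate) / 60)  # about 8.3 min for a dense scan
```

The longer the scan, the more time there is for vibration or drift to creep into the data, which is why dense scans are the most sensitive to motion.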

It is also worth considering that during a long scan from one position, the scanner may shift slightly because of temperature changes. If the scanner is mounted on a tripod and one side of it is exposed to strong sunlight, that side of the tripod will expand and gradually distort the scan data from one side to the other. However, some laser scanners have built-in compensators that counteract any movement of the scanner during operation.

Conoscopic holography

In a conoscopic system, a laser beam is projected onto the surface of the object, and the reflected beam returns along the same path, passing through a conoscopic crystal before it is projected onto a CCD (charge-coupled device). The result is a diffraction pattern that can be frequency-analyzed to determine the distance to the surface. The main advantage of conoscopic holography is that only a single beam path is needed to measure distance, which makes it possible, for example, to measure the depth of a small hole.

Handheld laser scanners

Handheld laser scanners create a 3D image using the triangulation principle described above: a laser dot or stripe is projected onto the object from a handheld device, and a sensor (often a CCD or a position-sensitive detector) measures the distance to the surface. The data is collected relative to the scanner's internal coordinate system, so if the scanner moves during scanning, its position must be determined accurately. This can be done using reference features on the scanned surface (adhesive reflective targets or natural features) or with an external tracking method. External tracking often takes the form of a laser tracker (which provides the sensor's position) with a built-in camera (which determines the scanner's orientation). Alternatively, photogrammetry with three cameras can provide the scanner's full six degrees of freedom (its position and orientation in three-dimensional space). Both techniques typically use infrared LEDs attached to the scanner, which the cameras observe through filters that make tracking robust to ambient lighting.
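Once a tracker reports the scanner's six degrees of freedom, every point measured in the scanner's internal frame can be moved into a shared world frame with one rotation and one translation. The sketch assumes the orientation arrives as yaw, pitch, and roll angles composed in Z-Y-X order; that convention and the function names are illustrative assumptions, since real trackers may report quaternions or use other angle conventions.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

def rotation_zyx(yaw: float, pitch: float, roll: float) -> List[List[float]]:
    """3x3 rotation matrix R = Rz(yaw) @ Ry(pitch) @ Rx(roll), angles in radians."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]

def to_world(point: Vec3, position: Vec3, yaw: float, pitch: float, roll: float) -> Vec3:
    """Map a point from the scanner's internal frame into the world frame.

    position and (yaw, pitch, roll) together are the scanner's six degrees of freedom.
    """
    r = rotation_zyx(yaw, pitch, roll)
    x, y, z = (sum(r[i][j] * point[j] for j in range(3)) + position[i] for i in range(3))
    return (x, y, z)

# Scanner at (10, 0, 0) turned 90 degrees left: its local x axis points along world y.
print(to_world((1.0, 0.0, 0.0), (10.0, 0.0, 0.0), math.pi / 2, 0.0, 0.0))
```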

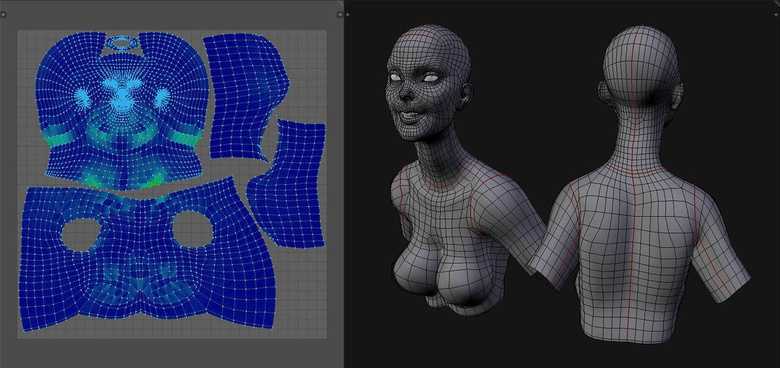

A computer collects the scan data and records it as points in 3D space, which after processing are converted into a triangulated mesh. A computer-aided design (CAD) system then creates a model using non-uniform rational B-splines (NURBS), a mathematical representation of curves and surfaces. Handheld laser scanners can combine this data with passive visible-light sensors, which capture surface texture and color, to build (or reverse-engineer) a complete 3D model.
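To give a feel for what a CAD system works with, the sketch evaluates a point on a NURBS curve using the Cox-de Boor recursion: B-spline basis functions are blended with per-point weights and divided by the weighted sum. The quarter-circle example is a textbook case chosen for illustration, and the function names are hypothetical; NURBS surfaces extend the same idea to two parameters.

```python
import math
from typing import Sequence, Tuple

def basis(i: int, p: int, u: float, knots: Sequence[float]) -> float:
    """Cox-de Boor recursion for the i-th B-spline basis function of degree p."""
    if p == 0:
        if knots[i] <= u < knots[i + 1]:
            return 1.0
        # Close the last non-empty span so that u == knots[-1] is covered too.
        if u == knots[-1] and knots[i] < knots[i + 1] == knots[-1]:
            return 1.0
        return 0.0
    value = 0.0
    if knots[i + p] > knots[i]:  # skip 0/0 terms from repeated knots
        value += (u - knots[i]) / (knots[i + p] - knots[i]) * basis(i, p - 1, u, knots)
    if knots[i + p + 1] > knots[i + 1]:
        value += ((knots[i + p + 1] - u) / (knots[i + p + 1] - knots[i + 1])
                  * basis(i + 1, p - 1, u, knots))
    return value

def nurbs_point(u: float, control: Sequence[Tuple[float, ...]], weights: Sequence[float],
                knots: Sequence[float], degree: int) -> Tuple[float, ...]:
    """Point on a NURBS curve: weighted basis blend divided by the sum of the weights."""
    num = [0.0] * len(control[0])
    den = 0.0
    for i, (pt, w) in enumerate(zip(control, weights)):
        b = basis(i, degree, u, knots) * w
        den += b
        for k, c in enumerate(pt):
            num[k] += b * c
    return tuple(c / den for c in num)

# A quarter of a unit circle, which non-rational B-splines cannot represent exactly.
ctrl = [(1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
w = [1.0, math.sqrt(2) / 2, 1.0]
knots = [0, 0, 0, 1, 1, 1]
x, y = nurbs_point(0.5, ctrl, w, knots, 2)
print(math.hypot(x, y))  # distance from the centre: 1 up to rounding
```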

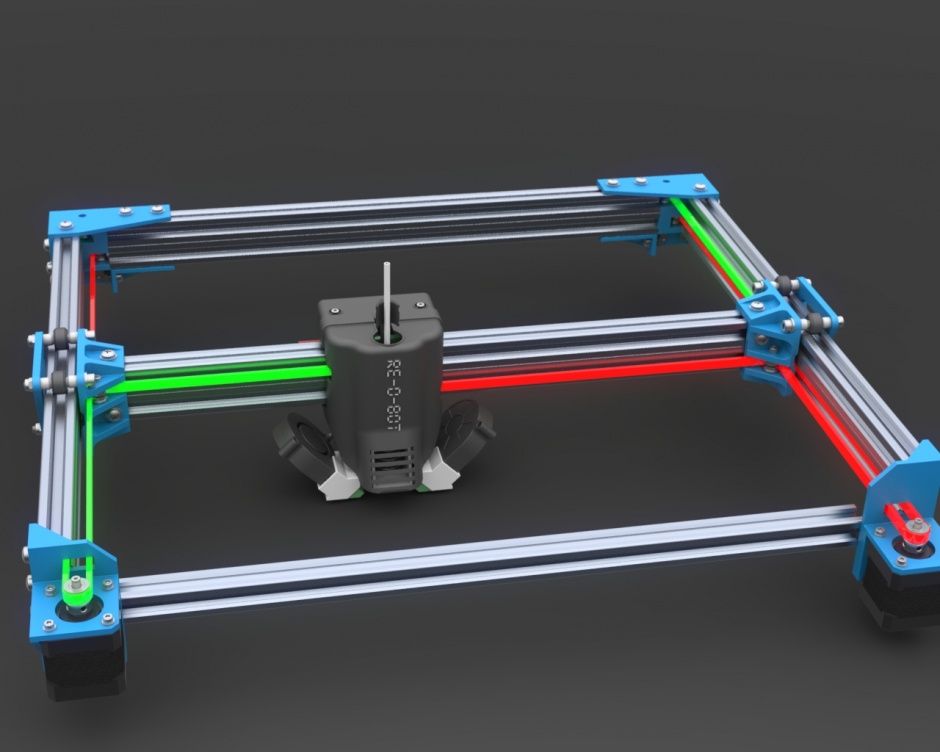

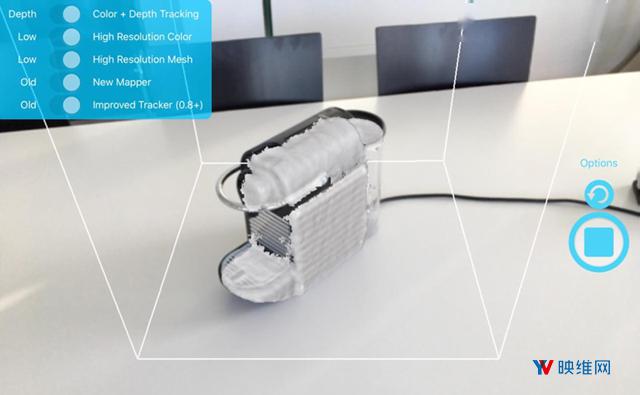

Structured light

Structured-light 3D scanners project a pattern of light onto the object and observe how the pattern deforms on its surface; the deformation reveals the object's shape. The pattern is projected with an LCD projector or another stable light source. A camera positioned slightly to the side of the projector observes the shape of the pattern and calculates the distance to every point in its field of view.
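The text does not say which patterns are projected. One common choice, assumed here purely for illustration, is a sequence of binary stripe images encoded with a Gray code: the lit/unlit sequence a camera pixel sees across all frames identifies which projector column illuminated it. Turning that column index into depth is then a triangulation between the camera ray and the projector plane, which this sketch does not show; all names are hypothetical.

```python
from typing import List, Sequence

def gray_encode(n: int) -> int:
    """Binary-reflected Gray code: neighbouring values differ in exactly one bit."""
    return n ^ (n >> 1)

def gray_decode(g: int) -> int:
    """Inverse of gray_encode."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n

def stripe_patterns(num_columns: int, num_bits: int) -> List[List[bool]]:
    """patterns[k][c] is True if projector column c is lit in frame k (most significant bit first)."""
    return [[bool((gray_encode(c) >> (num_bits - 1 - k)) & 1) for c in range(num_columns)]
            for k in range(num_bits)]

def decode_pixel(observed: Sequence[bool]) -> int:
    """Projector column that lit a camera pixel, from its lit/unlit sequence over all frames."""
    g = 0
    for bit in observed:
        g = (g << 1) | int(bit)
    return gray_decode(g)

patterns = stripe_patterns(num_columns=8, num_bits=3)
seen_by_pixel = [frame[5] for frame in patterns]  # a pixel that happens to see column 5
print(decode_pixel(seen_by_pixel))
```

The Gray code is used instead of plain binary because neighbouring columns differ in only one frame, so a pixel sitting on a stripe boundary decodes to one of the two neighbouring columns rather than to a distant one.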

Structured-light scanning is still an active area of research, with many papers published on it every year. Perfect maps have also proven useful as structured-light patterns: they solve the correspondence (matching) problem and allow errors to be detected as well as corrected.

The advantage of structured-light 3D scanners is their speed and accuracy. Instead of scanning one point at a time, they scan multiple points or the entire field of view at once. Scanning the entire field of view takes a fraction of a second, and the generated profiles are more accurate than those from laser triangulation. This reduces or eliminates distortion caused by motion. Some existing systems can even scan moving objects in real time. For example, the VisionMaster 3D scanning system has a 5-megapixel camera, so each frame contains 5 million points.

Real-time scanners use digital fringe projection and phase shifting (one family of structured-light techniques) to capture, reconstruct, and render high-density computer models of dynamically changing objects, such as facial expressions, at 40 frames per second. More recently, another scanner has been developed that can use various patterns and captures and processes data at up to 120 frames per second. It can also scan isolated surfaces, for example two moving hands. Using the binary defocusing technique, capture speeds can reach hundreds or even thousands of frames per second.
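Phase shifting can be illustrated with the classic three-step variant, assumed here as an example: three sinusoidal fringe images are projected with phase offsets of -120°, 0°, and +120°, and the fringe phase at each pixel is recovered from the three intensities alone. With intensities $I_k = A + B\cos(\varphi + \delta_k)$, the background $A$ and contrast $B$ cancel out. The recovered phase is wrapped to the range from $-\pi$ to $\pi$ and would still need unwrapping and calibration before it maps to depth.

```python
import math

def wrapped_phase(i1: float, i2: float, i3: float) -> float:
    """Fringe phase from three images shifted by -120, 0 and +120 degrees.

    Uses sqrt(3) * (I1 - I3) = 3B sin(phi) and 2*I2 - I1 - I3 = 3B cos(phi),
    so the background A and the contrast B drop out.
    """
    return math.atan2(math.sqrt(3.0) * (i1 - i3), 2.0 * i2 - i1 - i3)

# Simulate one pixel with made-up background, contrast and true phase.
A, B, phi = 0.5, 0.4, 1.0
shots = [A + B * math.cos(phi + shift) for shift in (-2 * math.pi / 3, 0.0, 2 * math.pi / 3)]
print(wrapped_phase(*shots))  # recovers phi = 1.0 up to rounding
```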

Modulated light

Modulated-light 3D scanners shine a continuously changing light at the object. Usually the light source varies its amplitude in a sinusoidal pattern. A camera detects the reflected light, and the amount by which the pattern has shifted reveals the distance the light traveled. Modulated light also allows the scanner to ignore light from sources other than its own, thus avoiding interference.
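One common way to turn the shift of a sinusoidal signal into distance, assumed here for illustration, is to measure the phase lag between the emitted and received light: a full $2\pi$ of lag corresponds to a round trip of one modulation wavelength. The 10 MHz modulation frequency is a made-up example value.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second

def distance_from_phase(phase_lag_rad: float, modulation_hz: float) -> float:
    """Distance from the phase lag of a sinusoidally modulated echo.

    The light goes out and back, so a lag of 2*pi corresponds to c / (2 * f) of range.
    """
    return SPEED_OF_LIGHT * phase_lag_rad / (4.0 * math.pi * modulation_hz)

def unambiguous_range(modulation_hz: float) -> float:
    """Beyond this distance the phase wraps around and ranges repeat."""
    return SPEED_OF_LIGHT / (2.0 * modulation_hz)

f = 10e6  # hypothetical 10 MHz modulation
print(unambiguous_range(f))             # about 15 m
print(distance_from_phase(math.pi, f))  # about 7.5 m, half the unambiguous range
```

The trade-off this exposes is that a higher modulation frequency gives finer distance resolution per degree of phase but a shorter unambiguous range.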

Volumetric techniques

Medical

Computed tomography (CT) is a medical imaging method that builds a three-dimensional image of the inside of an object from a large series of two-dimensional X-ray images. Magnetic resonance imaging (MRI) is another medical imaging technique; it provides much greater contrast between the soft tissues of the body than CT does. For this reason, MRI is used to image the brain, the musculoskeletal system, and the cardiovascular system, and to detect cancer. These techniques produce volumetric voxel models that can be rendered, modified, and converted into a traditional 3D surface using isosurface extraction algorithms.

Production

Although MRI, CT or microtomography are more widely used in medicine, they are also actively used in other areas to obtain a digital model of an object and its environment. This is important, for example, for non-destructive testing of materials, reverse engineering or the study of biological and paleontological samples.

Non-contact passive scanners

Passive scanners do not emit any radiation themselves; instead, they rely on ambient light reflected from the environment. Most scanners of this type are designed to detect visible light, the most readily available form of ambient radiation, although other types such as infrared can also be used. Passive methods are relatively inexpensive because in most cases they need no special hardware: an ordinary digital camera is enough.

Stereoscopic systems usually use two video cameras placed slightly apart and pointing in the same direction. By analyzing the differences between the images from the two cameras, the distance to each point in the image can be determined. The principle is the same as in human stereoscopic vision.
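
Take a rectified pair, meaning both image planes are aligned so that matching points lie on the same row. A point's horizontal shift between the two images is its disparity d in pixels, and depth follows from Z = f·B/d. Here f is the focal length in pixels and B is the distance between the cameras. The sketch below assumes already-rectified grayscale rows stored as plain lists and uses a brute-force sum-of-absolute-differences search. Real systems use calibrated cameras and far more robust matchers; the sample rows, focal length and baseline are hypothetical.

```python
from typing import List, Optional

def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> Optional[float]:
    """Z = f * B / d for a rectified stereo pair; None when disparity is zero."""
    if disparity_px <= 0:
        return None  # zero disparity: the point is effectively at infinity
    return focal_px * baseline_m / disparity_px

def match_row(left: List[float], right: List[float], max_disp: int, half_win: int = 1) -> List[int]:
    """Brute-force disparity search along one rectified scanline.

    For each pixel of the left row, try shifts 0..max_disp and keep the one
    whose window in the right row has the smallest sum of absolute
    differences (SAD). A point at left column x appears at x - d on the right.
    """
    n = len(left)
    disparities = []
    for x in range(n):
        best_d, best_cost = 0, float("inf")
        for d in range(max_disp + 1):
            cost, valid = 0.0, True
            for k in range(-half_win, half_win + 1):
                xl, xr = x + k, x + k - d
                if not (0 <= xl < n and 0 <= xr < n):
                    valid = False  # window falls off the image for this shift
                    break
                cost += abs(left[xl] - right[xr])
            if valid and cost < best_cost:
                best_d, best_cost = d, cost
        disparities.append(best_d)
    return disparities

if __name__ == "__main__":
    left = [0, 0, 0, 0, 9, 5, 7, 0, 0, 0]
    right = [0, 0, 9, 5, 7, 0, 0, 0, 0, 0]  # same feature, shifted 2 px to the left
    d = match_row(left, right, max_disp=3)
    print(d[4:7])  # [2, 2, 2]
    print(depth_from_disparity(d[5], focal_px=700, baseline_m=0.1))  # 35.0
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters much more for distant points than for near ones.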

Photometric systems usually use a single camera that captures several frames under different lighting conditions. These methods attempt to invert the image formation model in order to recover the surface orientation at each pixel.
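
Photometric stereo is one standard way to invert the image formation model. It usually assumes a matte (Lambertian) surface. Under that assumption, a pixel's brightness is its albedo times the dot product of the light direction and the surface normal. Three images taken under three known, non-coplanar light directions then give a 3×3 linear system per pixel. The sketch below solves that system for a single pixel. The light directions and the Lambertian model are assumptions; real surfaces with highlights or shadows need more images and a least-squares fit.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]

def _det3(m: Sequence[Sequence[float]]) -> float:
    """Determinant of a 3x3 matrix (cofactor expansion along the first row)."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def _solve3(a: Sequence[Sequence[float]], b: Sequence[float]) -> Vec3:
    """Solve a x = b for a 3x3 system using Cramer's rule."""
    det = _det3(a)
    if abs(det) < 1e-12:
        raise ValueError("light directions must not be coplanar")
    solution: List[float] = []
    for col in range(3):
        m = [list(row) for row in a]
        for row in range(3):
            m[row][col] = b[row]  # replace one column with the right-hand side
        solution.append(_det3(m) / det)
    return (solution[0], solution[1], solution[2])

def normal_and_albedo(lights: Sequence[Vec3], intensities: Sequence[float]) -> Tuple[Vec3, float]:
    """Recover one pixel's unit surface normal and albedo from three images.

    Assumes a Lambertian surface lit by distant sources: intensity_k equals
    albedo * dot(light_k, normal), with each light a unit direction vector
    and the pixel not in shadow under any light.
    """
    g = _solve3(lights, intensities)  # g = albedo * normal
    albedo = math.sqrt(g[0] ** 2 + g[1] ** 2 + g[2] ** 2)
    if albedo == 0.0:
        raise ValueError("pixel is dark under every light")
    return (g[0] / albedo, g[1] / albedo, g[2] / albedo), albedo

if __name__ == "__main__":
    s = 1 / math.sqrt(2)
    lights = [(0.0, 0.0, 1.0), (s, 0.0, s), (0.0, s, s)]  # hypothetical light directions
    readings = [0.8, 0.8 * s, 0.8 * s]  # a flat, camera-facing patch with albedo 0.8
    print(normal_and_albedo(lights, readings))
```

Repeating this for every pixel yields a normal map; a surface is then obtained by integrating the normals, which is a separate step not shown here.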

Silhouette techniques use outlines taken from a sequence of photographs of a three-dimensional object against a well-contrasted background. These silhouettes are extruded and intersected to form an approximation of the object's shape known as the visual hull. However, this method cannot capture some concavities of the object (for example, the inside of a bowl).
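
The carving step, and its blind spot for concavities, can be shown on a voxel grid. The sketch below makes two deliberate simplifications. It uses three axis-aligned orthographic views instead of many calibrated camera photographs. It also computes the silhouettes directly from a known voxel object instead of segmenting them from images. A voxel survives only if it projects inside every silhouette; the bowl-shaped test object is hypothetical.

```python
from itertools import product
from typing import Set, Tuple

Voxel = Tuple[int, int, int]
Pixel = Tuple[int, int]

def silhouettes(solid: Set[Voxel]) -> Tuple[Set[Pixel], Set[Pixel], Set[Pixel]]:
    """Orthographic silhouettes of a voxel object viewed along the x, y and z axes."""
    along_x = {(y, z) for x, y, z in solid}
    along_y = {(x, z) for x, y, z in solid}
    along_z = {(x, y) for x, y, z in solid}
    return along_x, along_y, along_z

def carve_visual_hull(sil_x: Set[Pixel], sil_y: Set[Pixel], sil_z: Set[Pixel],
                      size: int) -> Set[Voxel]:
    """Keep each voxel of a size^3 grid whose projection lies inside every silhouette."""
    return {
        (x, y, z)
        for x, y, z in product(range(size), repeat=3)
        if (y, z) in sil_x and (x, z) in sil_y and (x, y) in sil_z
    }

# Hypothetical test object: a square "bowl" whose cavity opens upward (+z).
outer = set(product(range(1, 7), repeat=3))
cavity = set(product(range(2, 6), range(2, 6), range(3, 7)))
bowl = outer - cavity

hull = carve_visual_hull(*silhouettes(bowl), size=8)
print(len(bowl), len(hull), cavity <= hull)  # 152 216 True
```

The carved hull still contains every voxel of the bowl's cavity. From each of these viewpoints, the cavity is hidden inside the bowl's own outline, so no silhouette can reveal it.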

Other, user-assisted methods rely on the user to detect and identify features and shapes of the object across a set of different images. From these, an approximate model of the object can be built. Such methods are useful for quickly modeling objects with simple shapes, such as buildings. Applications for this include D-Sculptor, iModeller, Autodesk ImageModeler and PhotoModeler.

This kind of 3D scanning is based on the principles of photogrammetry. It is also somewhat similar to panoramic photography. The difference is that the photographs are taken of one object, from many positions in three-dimensional space, in order to replicate the object itself. In panoramic photography, a series of photos is taken from a single point in space, which reconstructs the surrounding environment instead.
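
Triangulation is at the heart of photogrammetry. Once each camera's position is known, a feature seen in two photographs defines two rays, one from each camera. The feature's 3D position is where those rays meet. Measured rays never intersect exactly, so the sketch below uses the simple midpoint method: it returns the point halfway between the closest points on the two rays. It assumes camera centers and ray directions have already been obtained from calibration and feature matching. Production photogrammetry software refines many such points jointly (bundle adjustment).

```python
from typing import Tuple

Vec = Tuple[float, float, float]

def _sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def _dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def _along(origin: Vec, direction: Vec, k: float) -> Vec:
    return (origin[0] + k * direction[0], origin[1] + k * direction[1], origin[2] + k * direction[2])

def triangulate_midpoint(c1: Vec, d1: Vec, c2: Vec, d2: Vec) -> Vec:
    """Estimate a 3D point seen from camera centres c1, c2 along rays d1, d2.

    Finds the closest points c1 + s*d1 and c2 + t*d2 on the two viewing rays
    and returns their midpoint; with noise-free rays this is the intersection.
    """
    w0 = _sub(c1, c2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; the point cannot be triangulated")
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1, p2 = _along(c1, d1, s), _along(c2, d2, t)
    return ((p1[0] + p2[0]) / 2, (p1[1] + p2[1]) / 2, (p1[2] + p2[2]) / 2)

if __name__ == "__main__":
    # Hypothetical setup: two cameras 1 m apart, both seeing a point at (0.5, 0.2, 3.0).
    point = triangulate_midpoint((0.0, 0.0, 0.0), (0.5, 0.2, 3.0),
                                 (1.0, 0.0, 0.0), (-0.5, 0.2, 3.0))
    print(point)
```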

Reconstruction

From point clouds

The point clouds generated by the 3D Scanners can be directly used for measurement or visualization in architecture and engineering.

Most applications, however, instead use polygonal 3D models, NURBS (non-uniform rational B-spline) surface models, or editable feature-based CAD models (also known as solid models).

- Polygon mesh models: In a polygonal representation of a shape, a curved surface is modeled as many small, flat faceted surfaces (a disco ball is a striking example). Polygonal models, also called mesh models, are useful for visualization and for some CAM (computer-aided manufacturing) tasks, such as machining. However, they are generally "heavy" (they contain very large amounts of data) and are relatively difficult to edit in this form. Reconstruction into a polygonal model involves finding neighboring points and connecting them with straight lines until a continuous surface is formed.

For this, you can use a number of paid and free programs (MeshLab, Kubit PointCloud for AutoCAD, 3D JRC Reconstructor, ImageModel, PolyWorks, Rapidform, Geomagic, Imageware, Rhino 3D, etc.).

- Surface models: This approach represents the next level of sophistication in modeling. It uses a patchwork of curved surfaces to give the object its shape; these can be NURBS, T-Splines or other curved representations. With NURBS, for example, a sphere becomes a true mathematical sphere. Some applications require the surface patches to be laid out by hand, but more advanced programs also offer an automatic mode. Such patches are lighter and easier to manipulate when the model is exported to a computer-aided design (CAD) system. Surface models are editable, but only in a sculptural sense, by pushing and pulling the surface to deform it. This makes them well suited to organic and artistic shapes. Surface modeling is available in Rapidform, Geomagic, Rhino 3D, Maya and T-Splines.

- 3D CAD models: From an engineering and manufacturing perspective, the ultimate representation of a digitized shape is an editable, parametric CAD model.

CAD is, after all, industry's common "language" for describing, editing and maintaining the shape of an enterprise's assets. In CAD, for example, a sphere can be described by parametric features that are easily edited by changing a value (such as the radius or the center point).

These CAD models describe not only the envelope or shape of an object but also its design intent (i.e., critical features and their relationships to other features). Some design intent is not evident from the shape alone. An example is the lug bolts of a brake drum, which must be concentric with the hole in the center of the drum. This knowledge determines the sequence and method of building the CAD model: an engineer aware of this relationship will reference the bolts to the center rather than to the outer diameter. A CAD model of such a part therefore has to capture both the shape of the object and its design intent.
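
The brake-drum example can be made concrete with a toy parametric model. In it, the lug bolts are referenced to the drum's center rather than to its outer diameter. The class, its parameters and the dimensions used are hypothetical and not taken from any CAD system. The model only illustrates how design intent keeps the bolts concentric with the center hole when other dimensions change.

```python
import math
from dataclasses import dataclass, replace
from typing import List, Tuple

@dataclass(frozen=True)
class BrakeDrum:
    """Hypothetical parametric part: the bolt pattern is referenced to the centre."""
    center: Tuple[float, float]
    outer_diameter: float
    bolt_circle_diameter: float
    bolt_count: int

    def bolt_positions(self) -> List[Tuple[float, float]]:
        # Bolts lie on a circle around `center`, so they stay concentric with
        # the centre hole no matter how other dimensions are edited.
        cx, cy = self.center
        r = self.bolt_circle_diameter / 2
        step = 2 * math.pi / self.bolt_count
        return [(cx + r * math.cos(i * step), cy + r * math.sin(i * step))
                for i in range(self.bolt_count)]

if __name__ == "__main__":
    drum = BrakeDrum(center=(0.0, 0.0), outer_diameter=280.0,
                     bolt_circle_diameter=114.3, bolt_count=5)
    # Editing the outer diameter leaves the bolt pattern untouched...
    bigger = replace(drum, outer_diameter=300.0)
    print(bigger.bolt_positions() == drum.bolt_positions())  # True
    # ...while moving the centre carries the bolts along with it.
    moved = replace(drum, center=(10.0, -5.0))
    print([round(math.dist(p, moved.center), 6) for p in moved.bolt_positions()])
```

A model that referenced the bolts to the outer diameter instead would have to be corrected by hand whenever that diameter changed.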

Vendors take several approaches to producing a parametric CAD model. Some export only NURBS surfaces and leave the CAD engineer to complete the model (Geomagic, Imageware, Rhino 3D). Others use the scan data to create an editable and verifiable feature-based model. That model is imported into CAD with its full feature tree intact, yielding a complete native CAD model that captures both shape and design intent (Geomagic, Rapidform). Still other CAD applications are robust enough to work directly with a limited number of points or polygon models inside the CAD environment (CATIA, AutoCAD, Revit).
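
The polygon-mesh reconstruction described above connects neighboring points with straight edges. This is easiest to see on an organized point cloud, where points are stored in the same row/column grid as the depth image they came from. The sketch below assumes such a grid, with None marking pixels that returned no measurement. It turns every fully measured grid cell into two triangles and writes the result in the Wavefront OBJ format. Unorganized point clouds need more elaborate methods, such as Poisson or ball-pivoting reconstruction, both available in MeshLab.

```python
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float, float]
Triangle = Tuple[int, int, int]

def grid_to_mesh(grid: List[List[Optional[Point]]]) -> Tuple[List[Point], List[Triangle]]:
    """Triangulate an organized point cloud; None marks a missing measurement."""
    vertices: List[Point] = []
    index: Dict[Tuple[int, int], int] = {}  # (row, col) -> vertex number
    for r, row in enumerate(grid):
        for c, point in enumerate(row):
            if point is not None:
                index[(r, c)] = len(vertices)
                vertices.append(point)

    triangles: List[Triangle] = []
    for r in range(len(grid) - 1):
        for c in range(len(grid[r]) - 1):
            cell = [(r, c), (r, c + 1), (r + 1, c + 1), (r + 1, c)]
            if all(k in index for k in cell):  # skip cells with holes
                i0, i1, i2, i3 = (index[k] for k in cell)
                # Split the square cell into two triangles along one diagonal.
                triangles.append((i0, i1, i2))
                triangles.append((i0, i2, i3))
    return vertices, triangles

def to_obj(vertices: List[Point], triangles: List[Triangle]) -> str:
    """Serialise the mesh as Wavefront OBJ text (OBJ vertex indices start at 1)."""
    lines = [f"v {x} {y} {z}" for x, y, z in vertices]
    lines += [f"f {a + 1} {b + 1} {c + 1}" for a, b, c in triangles]
    return "\n".join(lines) + "\n"

if __name__ == "__main__":
    # Hypothetical 3x3 depth grid with one missing sample in the corner.
    grid = [[(float(c), float(r), 1.0) for c in range(3)] for r in range(3)]
    grid[2][2] = None
    verts, tris = grid_to_mesh(grid)
    print(len(verts), len(tris))  # 8 6
    print(to_obj(verts, tris))
```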

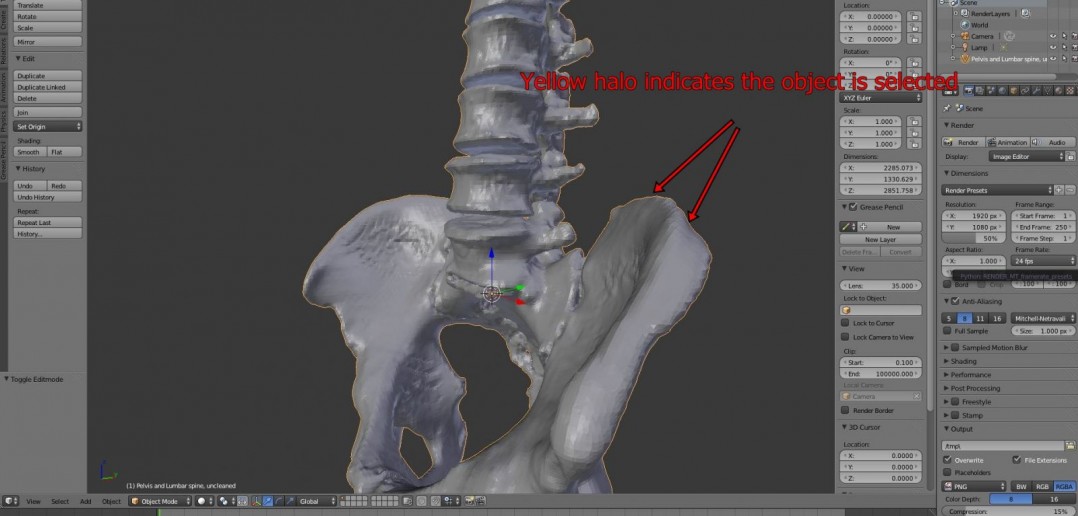

From sets of 2D slices

A 3D reconstruction of the brain and eyeballs can be produced from CT scans stored as DICOM images. In such a reconstruction, areas with the density of air or bone are made transparent, and the slices are stacked in approximate free-space alignment. The outer ring of material around the brain consists of the soft tissues of the skin and muscles on the outside of the skull. The slices are shown against a black background. Because they are simply stacked 2D images, the slices disappear when viewed edge-on, since they have effectively zero thickness. Each DICOM image represents about 5 mm of material averaged into a thin slice.

CT, industrial CT, MRI and micro-CT scanners do not produce a point cloud. Instead, they produce a set of 2D slices (each called a "tomogram"), which are stacked on top of each other to form a 3D representation. There are several ways to do this, depending on the desired result:

- Volume rendering: Different parts of an object usually have different threshold values or grayscale densities. From these, a three-dimensional model can be constructed and displayed on screen. Several models can be built from different thresholds, with different colors representing different parts of the object. Volume rendering is usually used only to visualize the scanned object.

- Image segmentation: When different structures have similar threshold or grayscale values, it may be impossible to separate them simply by adjusting volume rendering parameters.

The solution is segmentation, a manual or automatic procedure that removes unwanted structures from the image. Software that supports image segmentation can usually export the segmented structures in CAD or STL format for further work.

- Image-based meshing: 3D image data is often used for computational analysis, for example CFD and FEA. In that case, simply segmenting the data and then meshing from CAD can be very time-consuming, and it is practically unworkable for the complex topologies typical of image data. The solution is image-based meshing, an automated process that generates an accurate and realistic geometric description of the scan data.
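
Region growing is one simple automatic segmentation technique. It starts from a seed voxel inside the structure of interest and collects connected voxels whose gray values fall in a chosen range. Another structure with the same gray values is therefore left out, as long as it does not touch the first one. The sketch below assumes the slices are already loaded as equally spaced 2D arrays; reading real DICOM files requires a dedicated library. The gray-value range, seed and pixel size are illustrative, and the 5 mm slice spacing echoes the figure given above.

```python
from collections import deque
from typing import List, Set, Tuple

Slice = List[List[float]]      # one 2D image: rows of gray values
Voxel = Tuple[int, int, int]   # (slice, row, column)

NEIGHBOURS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))

def region_grow(slices: List[Slice], seed: Voxel, low: float, high: float) -> Set[Voxel]:
    """Collect voxels 6-connected to `seed` whose gray value lies in [low, high]."""
    nz, ny, nx = len(slices), len(slices[0]), len(slices[0][0])

    def in_range(z: int, y: int, x: int) -> bool:
        return (0 <= z < nz and 0 <= y < ny and 0 <= x < nx
                and low <= slices[z][y][x] <= high)

    if not in_range(*seed):
        return set()
    region, queue = {seed}, deque([seed])
    while queue:  # breadth-first flood fill
        z, y, x = queue.popleft()
        for dz, dy, dx in NEIGHBOURS:
            n = (z + dz, y + dy, x + dx)
            if n not in region and in_range(*n):
                region.add(n)
                queue.append(n)
    return region

def region_volume_mm3(region: Set[Voxel], pixel_mm: float, slice_mm: float) -> float:
    """Physical volume of a segmented region from its voxel count and voxel size."""
    return len(region) * pixel_mm * pixel_mm * slice_mm

if __name__ == "__main__":
    # Two hypothetical slices containing two structures with the same gray value.
    image = [[100, 100, 0, 0, 100],
             [100, 100, 0, 0, 100],
             [0, 0, 0, 0, 100]]
    slices = [image, [row[:] for row in image]]
    left_structure = region_grow(slices, seed=(0, 0, 0), low=90, high=110)
    print(len(left_structure))  # 8: the right-hand structure is not included
    print(region_volume_mm3(left_structure, pixel_mm=0.5, slice_mm=5.0))  # 10.0
```

A plain threshold at 90 to 110 would have selected both structures (14 voxels); the connectivity requirement is what separates them.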

Application

Material Processing and Manufacturing

3D laser scanning is a general term for measuring or scanning a surface with laser technology. It is used in several fields that differ mainly in the power of the lasers used and in the results of the scanning process. Low laser power is used when the scanned surface must not be affected, for example when it only needs to be digitized. Confocal and 3D laser scanning are methods for obtaining information about the scanned surface. Another low-power application uses structured-light projection systems for solar cell flatness metrology. This enables stress calculation at throughputs of more than 2,000 wafers per hour.

The laser power used in industrial laser scanning equipment is typically less than 1 W. It is usually on the order of 200 mW or less, though sometimes higher.

Construction industry

- Robot control: the laser scanner acts as the robot's eye

- As-built drawings of bridges, industrial plants and monuments

- Documentation of Historic Sites

- Site modeling and layout

- Quality control

- Measurement of works

- Reconstruction of highways

- Recording an existing shape or state as a baseline for detecting structural changes after extreme events such as earthquakes, ship or truck impacts, or fire

- Creation of GIS (Geographic Information System), maps and geomatics

- Scanning of subsurface in mines and karst voids

- Court records

Benefits of 3D scanning

Creating a 3D model by scanning has the following benefits:

- Makes working with complex parts and shapes more efficient

- Helps coordinate product design when it must incorporate parts made by others

- If CAD models become outdated, 3D scanning will provide an updated version

- Replaces missing or older parts

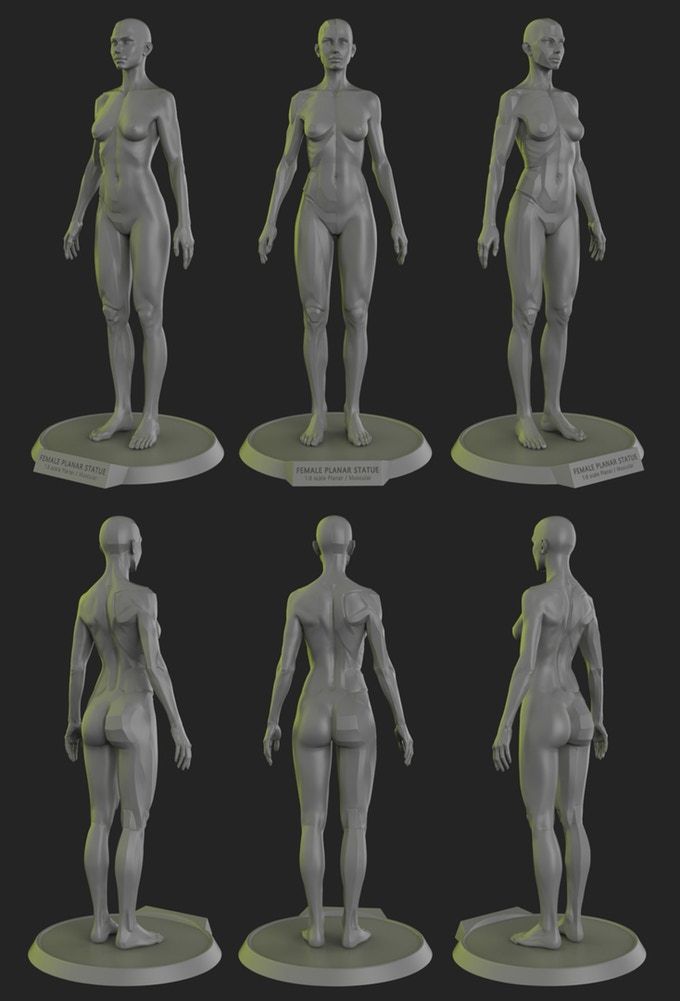

Entertainment

3D scanners are widely used in the entertainment industry to create 3D digital models for films and video games. When the model has a real-world counterpart, scanning produces a 3D model much faster than building the same model by hand in 3D modeling software. Artists often sculpt a physical model first and then scan it to obtain a digital equivalent, rather than creating the model directly on a computer.

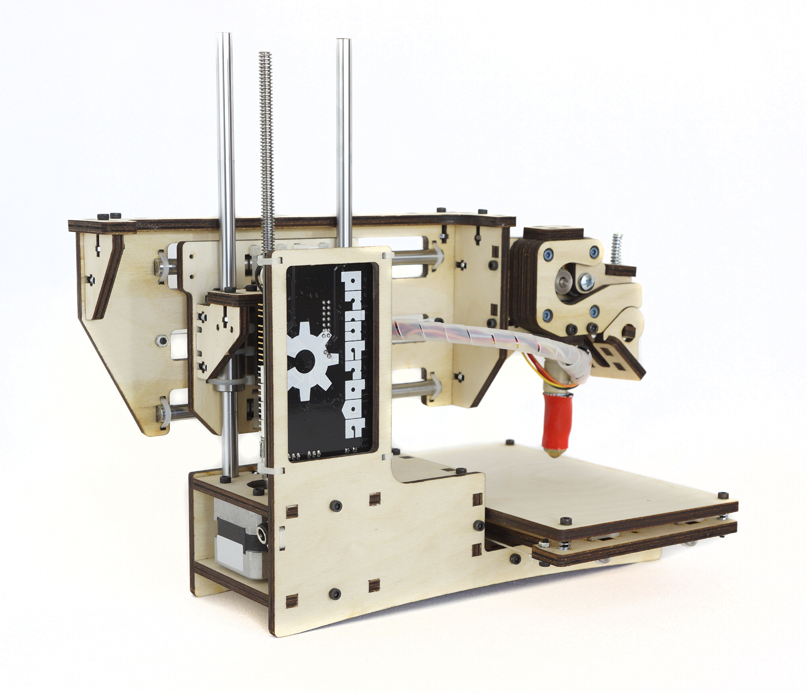

Reverse engineering

Reverse engineering of a mechanical component requires a precise digital model of the object to be reproduced. Such a model is better represented by something other than a set of points: a polygon mesh, a set of flat and curved NURBS surfaces or, ideally for mechanical components, a 3D CAD solid model. A 3D scanner can digitize free-form or gradually changing shapes as well as prismatic geometries. A coordinate measuring machine, by contrast, is usually used only to determine simple dimensions of highly prismatic parts. The scanned data is then processed into a usable digital model, usually with specialized reverse engineering software.

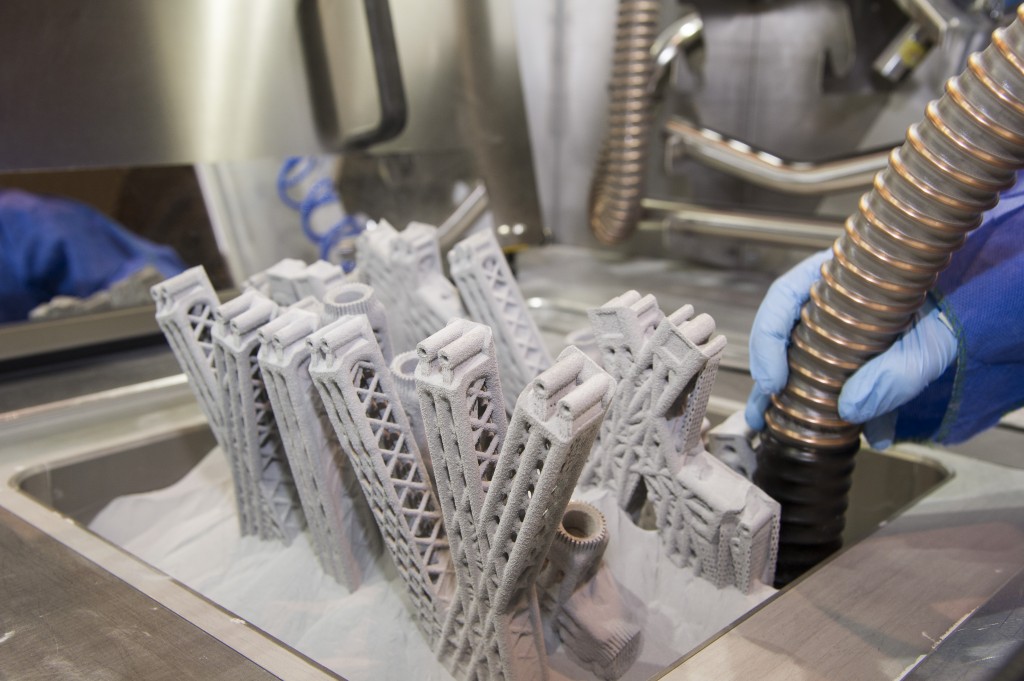

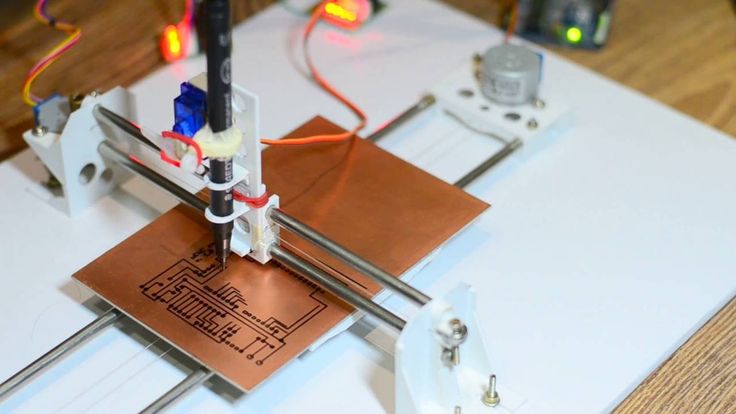

3D printing

3D scanners are also widely used in 3D printing. They can quickly produce fairly accurate 3D models of various objects and surfaces that are suitable for further refinement and printing. Both contact and non-contact scanning methods are used in this area, and each has its own advantages.

Cultural heritage

Many research projects have used 3D scanning of historical sites and artifacts to document and analyze them. Combining 3D scanning with 3D printing makes it possible to replicate real objects without a traditional plaster cast, which in many cases could damage a valuable or delicate cultural heritage artifact. As an example of copying a real object this way, the sculpture of the figure on the left was digitized with a 3D scanner, and the resulting data was processed in MeshLab. The digital 3D model was then printed on a rapid prototyping machine, producing a physical copy of the original object.

Michelangelo

In 1999, two research groups began scanning Michelangelo's statues. A Stanford University team led by Marc Levoy used a custom laser triangulation scanner built by Cyberware to scan Michelangelo's statues in Florence. These included the famous David, the "Slaves" and the four statues in the Medici Chapel. The scans had a resolution of one sample per 0.25 mm, fine enough to show the marks of Michelangelo's chisel. Such detailed scans produce a huge amount of data (up to 32 gigabytes), and processing it took about 5 months.

Around the same time, an IBM research group led by H. Rushmeier and F. Bernardini scanned the Florentine Pietà, capturing both geometry and color. The digital model from the Stanford scans was used extensively in the subsequent 2004 restoration of the David.

Medical CAD/CAM applications

3D scanners are widely used in orthopedics and dentistry to capture the 3D shape of a patient. They are gradually replacing the older plaster-cast technique. CAD/CAM software is then used to design and manufacture prostheses and implants.

Many dental CAD/CAM systems use 3D scanners to capture the 3D surface of a dental preparation (in vivo or in vitro). The restoration is then designed digitally with CAD software and produced with a CAM technology, for example a CNC (computer numerical control) milling machine or a 3D printer. Such systems are designed to simplify in vivo 3D scanning of the preparation and the subsequent modeling of the restoration (for example, a crown, filling or inlay).

Quality assurance and industrial metrology

Digitizing real-world objects is important in many fields. In industry, 3D scanning is widely used for quality assurance, for example to measure geometric accuracy. Nearly all industrial processes, such as assembly, are complex, highly automated and usually based on CAD (computer-aided design) data. The difficulty is that quality assurance requires the same degree of automation. A good example is the automated assembly of a modern car, which consists of many parts that must fit together exactly.

Quality assurance systems guarantee optimal product performance. Geometric metal parts in particular need careful checking to make sure they have the correct dimensions, fit together and ultimately work reliably.

In highly automated processes, the results of geometric measurements are transferred to the machines that manufacture the corresponding objects. Because of friction, wear and other mechanical effects, the finished part may differ slightly from its digital nominal model. To capture and evaluate these deviations automatically, the manufactured part must itself be digitized. 3D scanners are used for this: they generate point samples of the part's surface, which are then compared with the nominal data.

Comparing 3D scan data with a CAD model is called CAD comparison. It is useful for determining wear on molds and tooling, checking the accuracy of the final assembly, analyzing gaps and flushness, and analyzing complex sculpted surfaces. Laser triangulation scanners, structured-light devices and contact scanning are currently the leading technologies in industrial applications. Contact scanning remains the slowest but overall most accurate option.
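
At its simplest, CAD comparison computes each scanned point's deviation from the nominal geometry and flags the points that fall outside a tolerance. The sketch below assumes the nominal feature is a sphere with known center and radius. It also assumes the scan is already aligned to the CAD coordinate system. Real CAD comparison software also performs the alignment step (for example with ICP, iterative closest point) and works with arbitrary CAD surfaces. The scan points and tolerance are hypothetical.

```python
import math
from typing import Dict, Iterable, List, Tuple

Point = Tuple[float, float, float]

def sphere_deviations(points: Iterable[Point], center: Point, radius: float) -> List[float]:
    """Signed deviation of each scanned point from a nominal sphere.

    Positive: the point lies outside the nominal sphere; negative: inside it.
    """
    return [math.dist(p, center) - radius for p in points]

def compare_to_nominal(points: List[Point], center: Point, radius: float,
                       tolerance: float) -> Dict[str, object]:
    """Summarise a scan against its nominal geometry and flag out-of-tolerance points."""
    if not points:
        raise ValueError("no scanned points to compare")
    devs = sphere_deviations(points, center, radius)
    return {
        "max_abs_deviation": max(abs(d) for d in devs),
        "mean_deviation": sum(devs) / len(devs),
        "out_of_tolerance": [i for i, d in enumerate(devs) if abs(d) > tolerance],
    }

if __name__ == "__main__":
    scan = [(10.02, 0.0, 0.0), (0.0, 9.95, 0.0), (0.0, 0.0, 10.3)]  # hypothetical points, mm
    report = compare_to_nominal(scan, center=(0.0, 0.0, 0.0), radius=10.0, tolerance=0.1)
    print(report["out_of_tolerance"])  # [2]
```

Coloring each point by its signed deviation gives the familiar deviation color map produced by inspection software.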

3D Laser Scanner (3D LIDAR) HOKUYO YVT-35LX-F0

Hokuyo

Japanese company Hokuyo manufactures laser scanners (also known as lidars), advanced optical sensors and optical communication devices. Hokuyo's laser scanners are well known...

The YVT-35LX 3D laser scanner (3D LiDAR) scans its surroundings almost continuously, whether or not the detected objects are moving. Each frame contains a cloud of 2,590 points. Interlaced mode can be used to increase the density of points in the cloud. The scanner is equipped with an accelerometer and a PPS input.

The scanner measures distance using the time-of-flight (TOF) principle: it emits pulsed laser light and times the returning reflections. Because the beam covers a wide 3D field, the scanner provides data on the height, width and depth of objects. Classic 2D scanners cannot provide this information.
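
In pulsed time-of-flight measurement, the scanner times how long each pulse takes to return. Since the pulse covers the distance twice, d = c·t/2. The short sketch below only illustrates that arithmetic and shows why the timing electronics must resolve intervals of tens of picoseconds. The function names are illustrative and are not part of any Hokuyo software interface.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second

def distance_from_round_trip(t_seconds: float) -> float:
    """Pulsed time of flight: the pulse travels to the target and back, so d = c*t/2."""
    return SPEED_OF_LIGHT * t_seconds / 2

def round_trip_time(distance_m: float) -> float:
    """Time between emitting a pulse and receiving its echo from distance_m."""
    return 2 * distance_m / SPEED_OF_LIGHT

if __name__ == "__main__":
    # 35 m is the front working range quoted for the YVT-35LX.
    print(f"echo from 35 m arrives after {round_trip_time(35.0) * 1e9:.1f} ns")  # 233.5 ns
    # Resolving 1 cm in distance means resolving about 67 ps in time.
    print(f"1 cm corresponds to {round_trip_time(0.01) * 1e12:.1f} ps")  # 66.7 ps
```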

BENEFITS:

Wide field of view

The scanner's field of view is 210° horizontally and 40° vertically. The working range is 35 m to the front and approximately 14 m to the sides. For the detection range in each direction, see the scanner data sheet.

Input signal PPS

When a GPS receiver's PPS (pulse-per-second) signal is connected, the PPS input resets the scanner's timestamp, eliminating time offset errors.

Interlace mode

The direction of the laser beam is shifted slightly in each cycle, producing a denser point cloud. Density can be increased independently in two planes, up to 20 times horizontally and 10 times vertically. Operation at maximum density is called HD (High Density) mode.
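
The gain from interlacing can be estimated with simple arithmetic. The sketch below makes two hypothetical assumptions. First, each frame keeps the 2,590 points quoted above. Second, the beam offsets step through every combination of horizontal and vertical sub-steps exactly once. The actual YVT-35LX offset pattern and timing are not described here, so the schedule is illustrative only.

```python
from itertools import product
from typing import List, Tuple

POINTS_PER_FRAME = 2590   # points in one frame, as quoted above
MAX_H, MAX_V = 20, 10     # maximum interlace factors, as quoted above

def interlace_offsets(h_factor: int, v_factor: int) -> List[Tuple[float, float]]:
    """Hypothetical offset schedule: one (horizontal, vertical) beam shift per frame,
    expressed as a fraction of the base angular step, visiting each combination once."""
    if not (1 <= h_factor <= MAX_H and 1 <= v_factor <= MAX_V):
        raise ValueError(f"factors must be 1..{MAX_H} (horizontal) and 1..{MAX_V} (vertical)")
    return [(i / h_factor, j / v_factor)
            for j, i in product(range(v_factor), range(h_factor))]

def accumulated_points(h_factor: int, v_factor: int) -> int:
    """Points in the merged cloud after one full interlace cycle."""
    return POINTS_PER_FRAME * len(interlace_offsets(h_factor, v_factor))

if __name__ == "__main__":
    print(accumulated_points(20, 10))  # 518000 points after 200 frames (HD mode)
```

Under these assumptions, full HD interlacing (20 × 10) merges 200 frames into one cloud. The trade-off is that the dense cloud takes 200 times as long to build as a single frame, so fast-moving objects are smeared across it.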

Environmental resistance

Rated IP67, the YVT-35LX can be used in a wide range of environments, in ambient light of up to 100,000 lux, and withstands shocks of up to 10 G.

Multi-Echo Function

When the beam is reflected by rain, dust or fog, it can produce several returns in the same direction. In this case, a distance value is reported for each return.

When the scanner is used outdoors, the multi-echo function makes it possible to distinguish actual targets from returns caused by rain, dust, fog and the housing cover. Up to 4 echoes are supported (first, second, third and last).

ATTENTION: the scanner processes up to 8 returns but reports only 4 of them.
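
The reporting rule in the note can be expressed in a few lines. The sketch below is hypothetical. It assumes the returns for one beam direction are available as a list of distances and simply applies the "process up to 8, report first, second, third and last" rule. It is not Hokuyo's firmware logic or data format.

```python
from typing import Dict, Optional, Sequence

MAX_PROCESSED = 8                                # returns handled per beam (see note above)
REPORTED = ("first", "second", "third", "last")  # returns actually reported

def select_echoes(returns_m: Sequence[float]) -> Dict[str, Optional[float]]:
    """Hypothetical sketch of multi-echo reporting for one beam direction.

    Echoes arrive in order of distance, so sorting the measured ranges gives
    their arrival order. Only the first MAX_PROCESSED echoes are considered;
    of those, the first three and the last one are reported.
    """
    echoes = sorted(returns_m)[:MAX_PROCESSED]
    report: Dict[str, Optional[float]] = {name: None for name in REPORTED}
    for name, value in zip(REPORTED[:3], echoes):
        report[name] = value
    if echoes:
        report["last"] = echoes[-1]
    return report

if __name__ == "__main__":
    # Hypothetical beam: two raindrops, then a wall.
    print(select_echoes([18.7, 2.1, 3.4]))
    # {'first': 2.1, 'second': 3.4, 'third': 18.7, 'last': 18.7}
```

Applications that need the solid target behind rain or dust typically rely on the last echo. The earlier echoes show the particles in front of the target.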

Areas of use:

- Automated guided vehicles (AGV): Safety monitoring and terrain mapping

- Robotics: Recognition of surrounding objects

- Loaders: obstacle detection, pattern matching and height measurement

- Construction: determining the extent and depth of excavations and the profile of bulk soil

- Ports: crane collision avoidance

- Public places: counting people in shopping malls

- Entertainment: access control

YVT-35LX-F0 Laser Scanner Price:

6725.