3D printing and artificial intelligence

Using artificial intelligence to control digital manufacturing | MIT News

Scientists and engineers are constantly developing new materials with unique properties that can be used for 3D printing, but figuring out how to print with these materials can be a complex, costly conundrum.

Often, an expert operator must use manual trial-and-error — possibly making thousands of prints — to determine ideal parameters that consistently print a new material effectively. These parameters include printing speed and how much material the printer deposits.

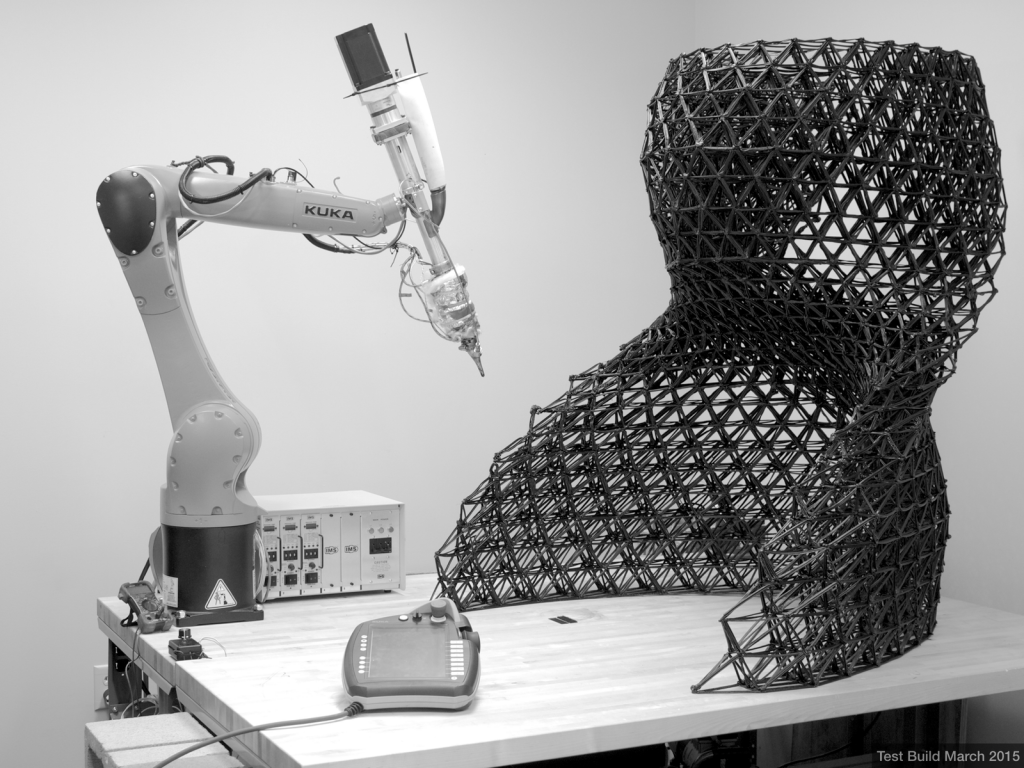

MIT researchers have now used artificial intelligence to streamline this procedure. They developed a machine-learning system that uses computer vision to watch the manufacturing process and then correct errors in how it handles the material in real-time.

They used simulations to teach a neural network how to adjust printing parameters to minimize error, and then applied that controller to a real 3D printer. Their system printed objects more accurately than all the other 3D printing controllers they compared it to.

The work avoids the prohibitively expensive process of printing thousands or millions of real objects to train the neural network. And it could enable engineers to more easily incorporate novel materials into their prints, which could help them develop objects with special electrical or chemical properties. It could also help technicians make adjustments to the printing process on-the-fly if material or environmental conditions change unexpectedly.

“This project is really the first demonstration of building a manufacturing system that uses machine learning to learn a complex control policy,” says senior author Wojciech Matusik, professor of electrical engineering and computer science at MIT who leads the Computational Design and Fabrication Group (CDFG) within the Computer Science and Artificial Intelligence Laboratory (CSAIL). “If you have manufacturing machines that are more intelligent, they can adapt to the changing environment in the workplace in real-time, to improve the yields or the accuracy of the system. You can squeeze more out of the machine.”


The co-lead authors on the research are Mike Foshey, a mechanical engineer and project manager in the CDFG, and Michal Piovarci, a postdoc at the Institute of Science and Technology in Austria. MIT co-authors include Jie Xu, a graduate student in electrical engineering and computer science, and Timothy Erps, a former technical associate with the CDFG.

Picking parameters

Determining the ideal parameters of a digital manufacturing process can be one of the most expensive parts of the process because so much trial-and-error is required. And once a technician finds a combination that works well, those parameters are only ideal for one specific situation. She has little data on how the material will behave in other environments, on different hardware, or if a new batch exhibits different properties.

Using a machine-learning system is fraught with challenges, too. First, the researchers needed to measure what was happening on the printer in real-time.

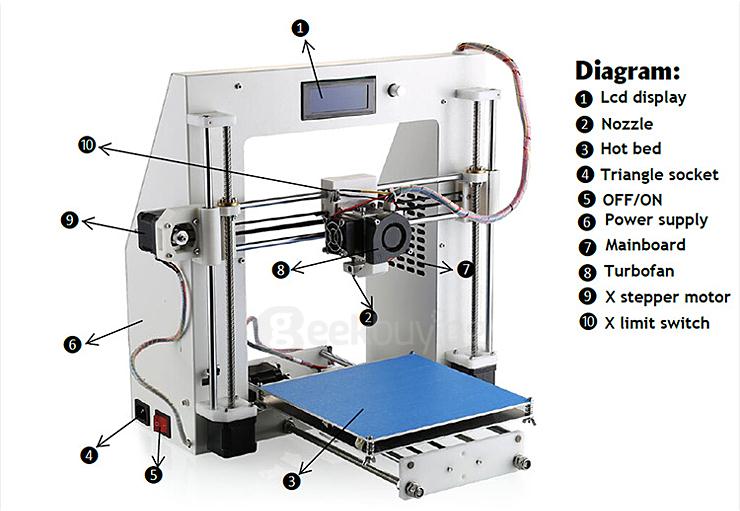

To do this, they developed a machine-vision system using two cameras aimed at the nozzle of the 3D printer. The system shines light at material as it is deposited and, based on how much light passes through, calculates the material’s thickness.
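One common way to turn a light-transmission reading into a thickness estimate is the Beer-Lambert law, where transmitted intensity falls off exponentially with thickness. The article does not say which optical model the researchers used, so the following is only a minimal sketch under that assumption; the function name and the attenuation coefficient are hypothetical.

```python
import math

def thickness_from_transmission(i_transmitted, i_incident, attenuation_per_mm):
    """Estimate material thickness in mm from transmitted light.

    Assumes Beer-Lambert attenuation: I = I0 * exp(-mu * thickness).
    `attenuation_per_mm` (mu) is a hypothetical, material-specific constant
    that would have to be calibrated for each ink.
    """
    if attenuation_per_mm <= 0:
        raise ValueError("attenuation coefficient must be positive")
    if not 0 < i_transmitted <= i_incident:
        raise ValueError("transmitted intensity must be in (0, incident]")
    # Invert the exponential law to recover the thickness.
    return -math.log(i_transmitted / i_incident) / attenuation_per_mm

# Half of the light gets through a material with mu = 2.0 per mm.
estimate = thickness_from_transmission(50.0, 100.0, attenuation_per_mm=2.0)
print(round(estimate, 4))
```

In a setup like this, the reading would be taken per pixel, giving a thickness map rather than a single number.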

“You can think of the vision system as a set of eyes watching the process in real-time,” Foshey says.

The controller would then process images it receives from the vision system and, based on any error it sees, adjust the feed rate and the direction of the printer.

But training a neural network-based controller to understand this manufacturing process is data-intensive, and would require making millions of prints. So, the researchers built a simulator instead.

Successful simulation

To train their controller, they used a process known as reinforcement learning in which the model learns through trial-and-error with a reward. The model was tasked with selecting printing parameters that would create a certain object in a simulated environment. After being shown the expected output, the model was rewarded when the parameters it chose minimized the error between its print and the expected outcome.


In this case, an “error” means the model either dispensed too much material, placing it in areas that should have been left open, or did not dispense enough, leaving open spots that should be filled in. As the model performed more simulated prints, it updated its control policy to maximize the reward, becoming more and more accurate.
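The two kinds of error described above can be illustrated on a small occupancy grid. This is a hedged sketch, not the researchers' reward function: the grid representation, the equal weighting of the two error types, and the function names are all assumptions made for illustration.

```python
def deposition_error(printed, target):
    """Count over- and under-deposited cells on two equally sized 0/1 grids."""
    over = under = 0
    for printed_row, target_row in zip(printed, target):
        for p, t in zip(printed_row, target_row):
            if p and not t:
                over += 1    # material placed where the cell should be open
            elif t and not p:
                under += 1   # open spot where the cell should be filled
    return over, under

def reward(printed, target):
    """Higher is better: zero when the print matches the target exactly."""
    over, under = deposition_error(printed, target)
    return -(over + under)

target = [[0, 1, 1, 0],
          [0, 1, 1, 0]]
printed = [[0, 1, 1, 1],   # one cell too many on the right
           [0, 0, 1, 0]]   # one missing cell on the left

print(deposition_error(printed, target))  # (1, 1)
print(reward(printed, target))            # -2
```

A reinforcement-learning agent would try to choose printing parameters that push this reward toward zero over many simulated prints.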

However, the real world is messier than a simulation. In practice, conditions typically change due to slight variations or noise in the printing process. So the researchers created a numerical model that approximates noise from the 3D printer. They used this model to add noise to the simulation, which led to more realistic results.
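The idea of injecting noise into a simulator can be sketched as follows. The article does not describe the form of the researchers' numerical noise model, so the multiplicative Gaussian noise, the `noise_std` parameter, and the toy deposition formula (cross-sectional area equals flow rate divided by nozzle speed) are assumptions chosen only to keep the example small.

```python
import random

def simulate_bead_area(flow_rate, speed, rng, noise_std=0.05):
    """Toy deposition model: bead cross-section (mm^2) for one path segment.

    flow_rate is in mm^3/s and speed in mm/s, so the ideal area is
    flow_rate / speed. Multiplicative Gaussian noise stands in for
    real-world variation; its form and size are assumptions.
    """
    if speed <= 0:
        raise ValueError("speed must be positive")
    ideal_area = flow_rate / speed
    noisy_area = ideal_area * (1.0 + rng.gauss(0.0, noise_std))
    return max(0.0, noisy_area)  # a bead cannot have negative area

rng = random.Random(0)  # fixed seed so the run is repeatable
areas = [simulate_bead_area(2.0, 4.0, rng) for _ in range(1000)]
mean_area = sum(areas) / len(areas)
print(f"ideal area 0.5, mean of noisy samples {mean_area:.3f}")
```

Training a controller against perturbed outcomes like these, rather than the ideal value, is what lets it cope with variation it has never seen on real hardware.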

“The interesting thing we found was that, by implementing this noise model, we were able to transfer the control policy that was purely trained in simulation onto hardware without training with any physical experimentation,” Foshey says. “We didn’t need to do any fine-tuning on the actual equipment afterwards.”


When they tested the controller, it printed objects more accurately than any other control method they evaluated. It performed especially well at infill printing, which is printing the interior of an object. Some other controllers deposited so much material that the printed object bulged up, but the researchers’ controller adjusted the printing path so the object stayed level.

Their control policy can even learn how materials spread after being deposited and adjust parameters accordingly.

“We were also able to design control policies that could control for different types of materials on-the-fly. So if you had a manufacturing process out in the field and you wanted to change the material, you wouldn’t have to revalidate the manufacturing process. You could just load the new material and the controller would automatically adjust,” Foshey says.

Now that they have shown the effectiveness of this technique for 3D printing, the researchers want to develop controllers for other manufacturing processes. They’d also like to see how the approach can be modified for scenarios where there are multiple layers of material, or multiple materials being printed at once. In addition, their approach assumed each material has a fixed viscosity (“syrupiness”), but a future iteration could use AI to recognize and adjust for viscosity in real-time.


Additional co-authors on this work include Vahid Babaei, who leads the Artificial Intelligence Aided Design and Manufacturing Group at the Max Planck Institute; Piotr Didyk, associate professor at the University of Lugano in Switzerland; Szymon Rusinkiewicz, the David M. Siegel ’83 Professor of computer science at Princeton University; and Bernd Bickel, professor at the Institute of Science and Technology in Austria.

The work was supported, in part, by the FWF Lise-Meitner program, a European Research Council starting grant, and the U.S. National Science Foundation.

MIT Researchers Using Artificial Intelligence For 3D Printing New Materials

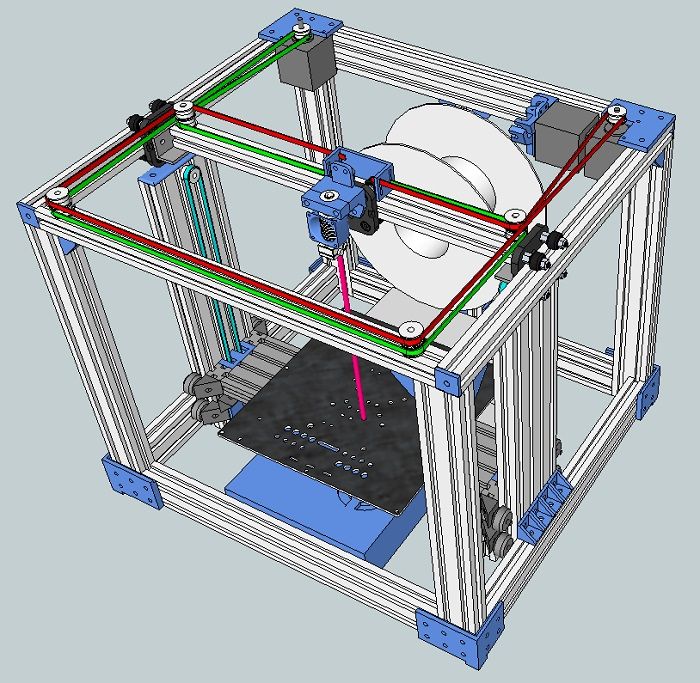

Above: MIT researchers have trained a machine-learning model to monitor and adjust the 3D printing process in real-time/Source: MIT News Office

Scientists and engineers are continuously creating new materials with unique qualities that can be utilised for 3D printing, but figuring out how to print with these materials can be a difficult and expensive task. MIT researchers identified this crucial problem and, to solve it, they are using artificial intelligence for 3D printing with new materials.

Often, a skilled operator must rely on manual trial-and-error, potentially thousands of prints, to discover the ideal parameters for printing a novel material effectively. These parameters include printing speed and the amount of material deposited by the printer.

Mike Foshey, a mechanical engineer and project manager at the CDFG, and Michal Piovarci, a postdoc at Austria’s Institute of Science and Technology, are the study’s co-lead authors. Jie Xu, an electrical engineering and computer science graduate student at MIT, and Timothy Erps, a former CDFG technical associate, are among the co-authors of the research.

Contents

- 1 Artificial Intelligence for 3D Printing with New Materials

- 2 Picking parameters

- 3 Successful simulation

- 4 What Next?

Artificial Intelligence for 3D Printing with New Materials

MIT researchers have now used artificial intelligence to streamline this trial-and-error procedure. They created a machine-learning system that uses computer vision to monitor the manufacturing process and fix faults in how the material is handled in real time.

They utilised simulations to train a neural network to modify printing parameters to reduce error, and then they applied that controller to a real 3D printer. Their system created items more precisely than any other 3D printing controller they tested.

Above: Closed-loop control of direct ink writing via reinforcement learning/Source: Michal Piovarči

The research avoids the excessively expensive procedure of printing thousands or millions of real-world objects in order to train the neural network. It may also make it easier for engineers to include novel materials into their prints, allowing them to create objects with unique electrical or chemical properties. It could also assist technicians in making on-the-fly changes to the printing process if material or ambient circumstances change unexpectedly.


Senior author Wojciech Matusik, professor of electrical engineering and computer science at MIT, who leads the Computational Design and Fabrication Group (CDFG) within the Computer Science and Artificial Intelligence Laboratory (CSAIL), said, “This project is really the first demonstration of building a manufacturing system that uses machine learning to learn a complex control policy. If you have manufacturing machines that are more intelligent, they can adapt to the changing environment in the workplace in real-time, to improve the yields or the accuracy of the system. You can squeeze more out of the machine.”

Picking parameters

Determining the ideal parameters of a digital manufacturing process can be one of the most expensive parts of the process because so much trial-and-error is required.

To measure what was happening on the printer in real time, they created a machine-vision system with two cameras aimed at the 3D printer’s nozzle. The technology beams light at the material as it is deposited and estimates the thickness based on how much light passes through.

The controller would then analyse the images received from the vision system and, based on any errors detected, modify the feed rate and printer direction.

“You can think of the vision system as a set of eyes watching the process in real-time,” Foshey said.

However, training a neural network-based controller to understand this manufacturing process is time-consuming and would necessitate millions of prints. Instead, the researchers created a simulator.

Successful simulation

They used a process known as reinforcement learning to train their controller, in which the model learns through trial-and-error with a reward. The model was tasked with selecting printing parameters that would create a specific object in a simulated environment, and it was rewarded when the parameters minimised the error between the print and the expected result.

An “error” in this case means that the model either dispensed too much material, filling in areas that should have been left open, or did not dispense enough, leaving open spots that should be filled in. The researchers were aware that in the real world, conditions frequently change as a result of minor variations or noise in the printing process. As a result, the researchers developed a numerical model that approximates 3D printer noise. This model was used to add noise to the simulation, resulting in more realistic results.

Foshey added, “The interesting thing we found was that, by implementing this noise model, we were able to transfer the control policy that was purely trained in simulation onto hardware without training with any physical experimentation. We didn’t need to do any fine-tuning on the actual equipment afterwards.”

When they tested the controller, they discovered that it printed objects more accurately than any other control method they tried.

What Next?

The researchers want to develop controllers for other manufacturing processes now that they have demonstrated the effectiveness of this technique for 3D printing. They’d also like to see how the approach can be modified for scenarios involving multiple layers of material or the printing of multiple materials at the same time. Furthermore, they assumed that each material has a fixed viscosity (“syrupiness”), but a future iteration could use AI to recognise and adjust for viscosity in real-time.


Vahid Babaei, who leads the Max Planck Institute’s Artificial Intelligence Aided Design and Manufacturing Group; Piotr Didyk, associate professor at the University of Lugano in Switzerland; Szymon Rusinkiewicz, the David M. Siegel ’83 Professor of computer science at Princeton University; and Bernd Bickel, professor at the Institute of Science and Technology in Austria, are also co-authors on this paper.

The research was funded in part by the FWF Lise-Meitner programme, a European Research Council starting grant, and the National Science Foundation of the United States.

About Manufactur3D Magazine: Manufactur3D is an online magazine on 3D Printing.

Dimension, or why 3D printers and artificial intelligence won't take over the world / Sudo Null IT News

I am not a cynic at all - I am genuinely delighted with what modern technology is capable of. But when I hear the confidently visionary “3D printers will change our lives - after all, it will be possible to print anything on them right at home” or “in 5-10-15 years, artificial intelligence will be equal to a person ...” my jaw clenches.

What about the dimension? Dimensionality ... it spoils everything.

About bad infinity and definitions

The guinea pig (in Russian, literally a "sea pig"). Firstly, it is not from the sea, and secondly, it is not a pig ...

Do you remember geometry lessons at school? How many points are there in a line segment? Infinitely many. How many segments are there in a square? Also infinitely many. How many points are there in a square? What, infinity again? This is already some kind of bad infinity, an infinity of the second order ... Thank God that in the real world, in order to draw a tolerable segment, it is not at all necessary to draw points endlessly - a qualitative leap occurs rather quickly - and a set of points subjectively turns into a completely tolerable line.

But in order to draw a square, we will have to make a qualitative leap again - after all, no matter how many points you stuff into a segment, it will not become a square. Each new dimension requires its own qualitative transition.

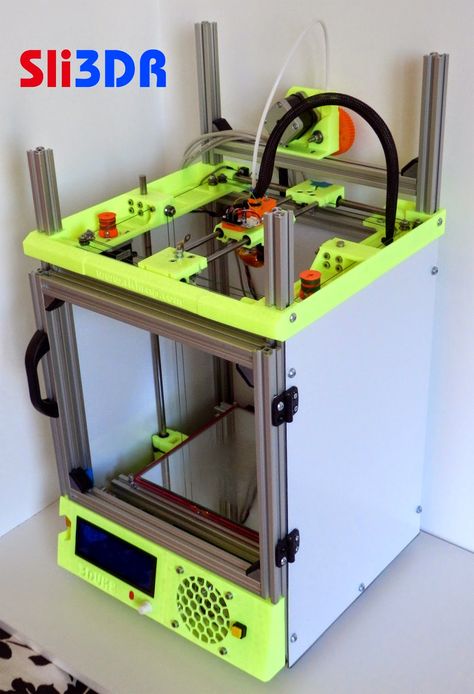

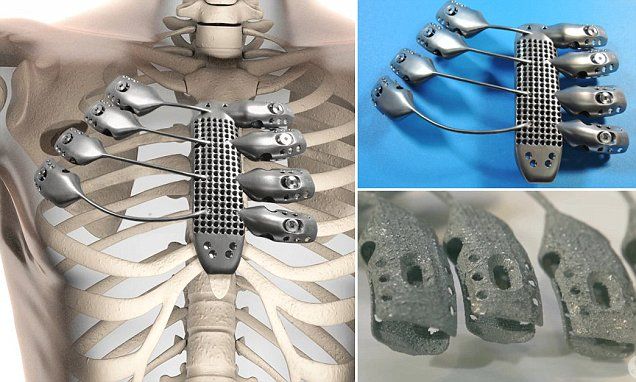

The confusion, as always, begins with incorrect definitions - a 3D printer is not a printer at all (it does not make any "prints" or images), and, strictly speaking, it is not 3D either. If we simplify the operation of the printer, it most of all resembles the “concrete pourer” with which modern houses are built. It crawls back and forth, squeezing out sausages of a congealing mass.

About complexity and usefulness

Dad, who would beat whom - would the elephant beat the whale, or the whale the elephant?

Back and forth - these are two whole dimensions that require qualitative transitions - the ability to move in very fine steps along two axes. In this sense, a 3D printer is no better than a regular LaserJet. But unlike a banal printer, it can move in another dimension - up and down. True, this does not mean at all that the printer can print an arbitrary point in space (evil gravity does not allow it). So far, each element of the printed structure must be securely attached to the build platform, so the third dimension is rather experimental, and nothing worthwhile comes out without finishing the resulting product with a file. If you don’t believe me, try printing a baby rattle and tell us how it went.

It would seem that a 3D printer should logically be an order of magnitude more complicated than a color photo printer. But nothing of the sort - a regular color printer has at least three more color-space dimensions. And that gives it the versatility it needs, allowing it to print almost any image. The path from the first black-and-white printer to a full-color printer took a modest 30 years, and this despite the fact that printing theory and color theory are well developed. A 3D printer as a printing device is an order of magnitude SIMPLER than a good color photo printer. And the only reason why it did not appear much earlier is that it made no economic sense.

About dimension

Which would you rather be: smart or beautiful?

How far does a printer need to go to take over the world? That is, so that it really becomes possible to print the things you need on it. Let's list the dimensions that exist in the world of real things. I understand that the list is not exact, but just offhand:

- Hardness (soft - hard)

- Density (light - heavy)

- Plasticity (ductile - elastic)

- Electrical conductivity (dielectric - conductor)

- Hue (red - violet)

- Saturation (colorless - vivid)

- Brightness (or rather reflectivity) (black - bright)

- Thermal conductivity (I can’t even find the words)

- Reactivity (inert - active)

- …

And if, for example, you decide to print food, then add edibility, humidity, acidity, salinity, bitterness, meatiness ... And we haven't even touched on the flavor...

About life

People always vulgarize the most beautiful ideas

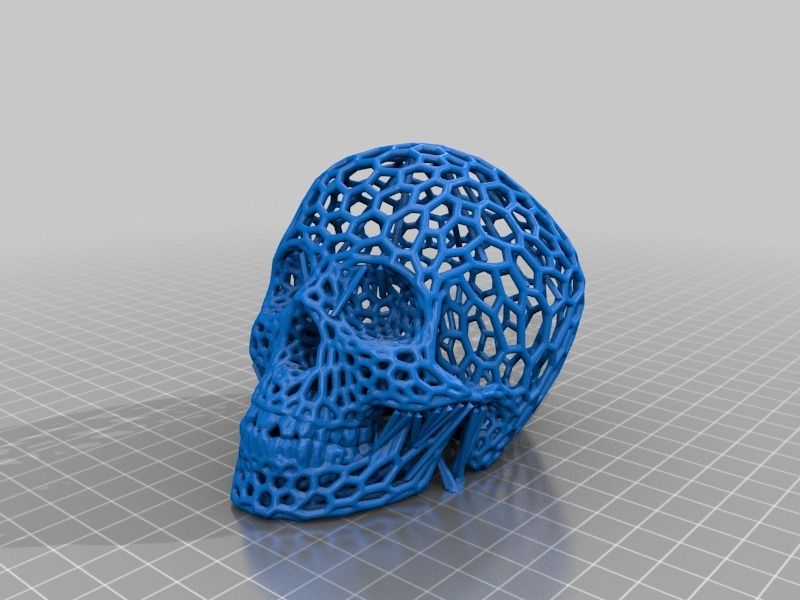

Imagine - Saturday morning. The children invite you to play table tennis with them. You quickly change into a tracksuit, grab a net and rackets - but here's the trouble: the dog ate all the balls. Run to the store? Nonsense, because you have a 3D printer! All you need to do is print a couple of balls. Just a simple, elastic, light, white, almost perfect sphere; this is not some heavy, hard "something-hedron" with a cool logo ... (oh, yes, that would be a golf ball)

Five minutes of searching on the Internet and you have already downloaded a great model. The printer hums for another couple of minutes, processing the order - and, oh, the miracle of technology! You have a crooked monster in your hands that barely bounces and weighs as much as a fishing sinker...

Thank God the retrograde children have already ordered everything from the online store, and the delivery drone is already carefully unloading the package in the center of the lawn...

About progress

At a time when spaceships...

The thing is that your printer must have high resolution in every dimension, so high that quantity turns into quality. I have no idea how this problem will be solved - the only thing that comes to my mind is creating a material with the desired chemical and physical properties at the required point in space (a routine thing for printers of the Demiurg-2200 series - after all, they have a two-megawatt thermonuclear power source and a "Yahva Inside" electron-tunneling manipulator on board).
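To get a feel for how quickly the demand for "resolution in every dimension" grows, here is some back-of-the-envelope arithmetic. It assumes, purely for illustration, that each property can be set independently to one of a fixed number of levels at every printed point; the function name and the choice of 10 levels are hypothetical.

```python
def distinct_point_states(levels_per_dimension, property_dimensions):
    """Number of distinct material states a single printed point can take
    if every property dimension is independently adjustable."""
    return levels_per_dimension ** property_dimensions

# One property (say, only color brightness), three, and the nine
# properties named in the list above, each with a modest 10 levels.
for dims in (1, 3, 9):
    print(dims, distinct_point_states(10, dims))
# 1 10
# 3 1000
# 9 1000000000
```

Even at this coarse resolution, nine independent properties already give a billion possible states per point, before the three spatial axes are taken into account.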

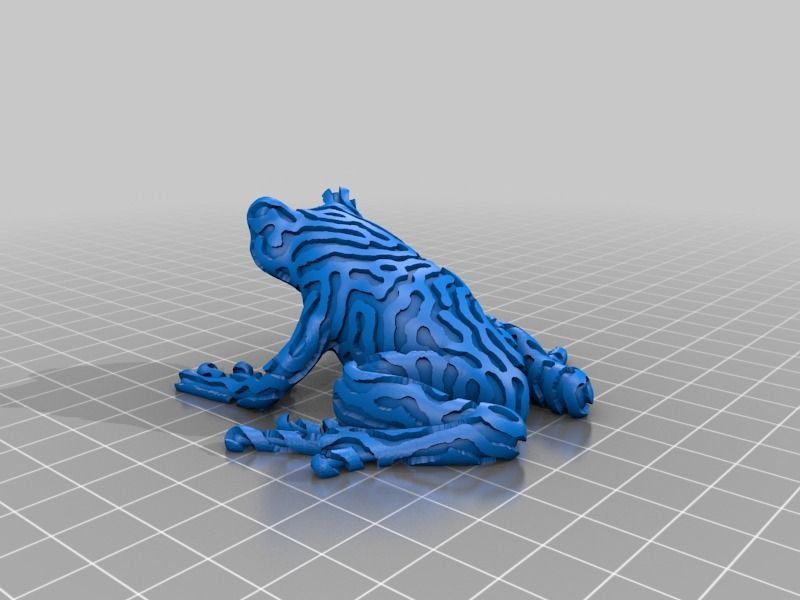

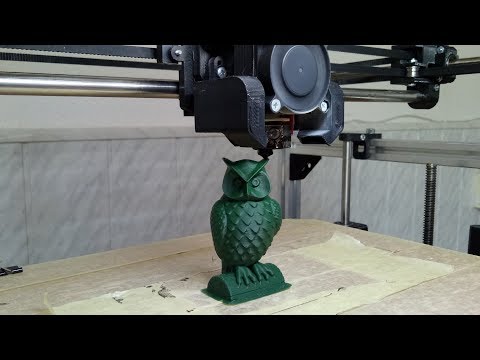

I am an optimist and I believe that one day it will be so. But for now, we are left to admire the technological progress, watching how the sausages of molten plastic fall onto the substrate with jeweler's precision.

PS

So what about artificial intelligence? It is all the same dimensionality... Today's mind is 7 billion individuals, 500 million years of evolution, billions of neurons. And each of them has thousands of arbitrary connections (the three-dimensional structure of a three-dimensional brain, instead of a one-dimensional code of zeros and ones). And through all this richness run signals of many types, processed and interpreted differently in different chemical environments (oh, those pranksters: mu-opioids, endorphins and oxytocin ...). So do not expect real intelligence anytime soon. Although who knows where the subjective boundary lies at which quantity turns into quality ...

Artificial intelligence in 3D printing / 3d printing / 3Dmag.org

Researchers at Michigan Technological University have developed an open source AI-based algorithm capable of detecting and correcting 3D printing errors.

How does it work? A plate with a visual marker is placed on the printing platform, which clearly indicates where the model will be reproduced. Using just one camera, the algorithm compares the digital coordinates from the STL file with the corresponding coordinates of the resulting model in the real world.

A digital 3D copy of the model is created in space (similar to AR), which later serves as a reference for comparison. The software can then generate whatever printer actions are needed to improve the reliability and success rate of 3D printing. The algorithm targets fused filament fabrication (FFF) and is ultimately designed to save time and filament.

Initial testing was done on a delta RepRap 3D printer with PLA filament. Although the results were promising, the scientists still have work to do. The algorithm is able to consistently detect failures caused by under- or over-extrusion, but the correction mechanisms still need to be improved. In its current form, the researchers see this work as an intelligent tool for stopping a failing print.
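The comparison step can be illustrated with a toy check that compares how much material a reference silhouette expects against how much the camera sees. This is not the Michigan Tech algorithm, whose details are not given here: the binary masks, the area-ratio test, the 10% tolerance, and the function name are all assumptions made for the sketch.

```python
def classify_extrusion(reference_mask, observed_mask, tolerance=0.10):
    """Compare filled area in an observed 0/1 mask against a reference mask.

    Returns "under-extrusion", "over-extrusion", or "ok" depending on
    whether the observed area deviates from the reference by more than
    `tolerance` (a hypothetical threshold).
    """
    reference_area = sum(sum(row) for row in reference_mask)
    observed_area = sum(sum(row) for row in observed_mask)
    if reference_area == 0:
        raise ValueError("reference mask is empty")
    ratio = observed_area / reference_area
    if ratio < 1.0 - tolerance:
        return "under-extrusion"
    if ratio > 1.0 + tolerance:
        return "over-extrusion"
    return "ok"

reference = [[0, 1, 1, 0],
             [0, 1, 1, 0]]
too_thin = [[0, 1, 0, 0],
            [0, 1, 0, 0]]
too_wide = [[1, 1, 1, 1],
            [1, 1, 1, 1]]

print(classify_extrusion(reference, too_thin))   # under-extrusion
print(classify_extrusion(reference, too_wide))   # over-extrusion
print(classify_extrusion(reference, reference))  # ok
```

A tool used only for stopping prints, as described above, would simply halt the job whenever the result is not "ok".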
